Blended MOOCs

Is the Second Time the Charm?

Students at Bowie State discuss their experience with a MOOC in this video.

Much of the hype surrounding MOOCs has faded. As Steve Kolowich shows in a recent Chronicle piece, "Few people would now be willing to argue that massive open online courses are the future of higher education." The Babson Survey Research Group survey that Kolowich cites shows that higher ed leaders are now less certain that MOOCs "are a sustainable way to offer courses," that "self-directed learning" will have an important impact on higher ed, or that "MOOCs are important for institutions to learn about online pedagogy."

At Ithaka S+R we have been researching MOOCs from a different angle. Last year we published results from a large-scale study that embedded MOOCs in 14 campus-based courses across the University System of Maryland. Our findings were rather mixed. Students in hybrid courses using MOOCs achieved the same outcomes as those in traditionally taught sections of the same courses, even though the hybrid courses were newly redesigned, used new technology, and had about a third less class time. As in our previous study with the Open Learning Initiative, we saw no evidence of harm to any subgroup of students, such as those from first-generation or low-income families. Instructors were very positive about their experiences working with MOOCs and identified numerous benefits both for themselves and for their students.

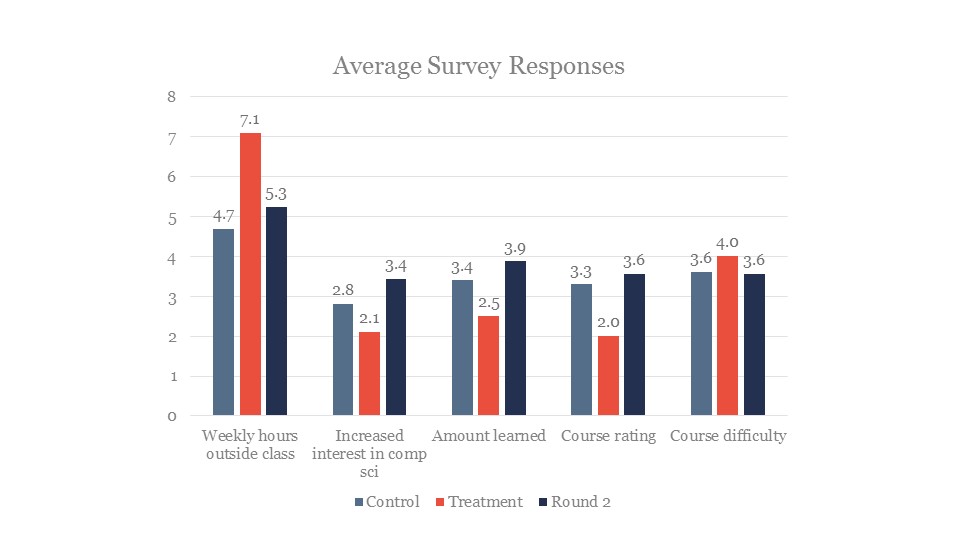

On the other hand, students in the hybrid sections of the side-by-side tests reported significantly lower levels of satisfaction and felt that they learned less than their peers in the traditional sections. Moreover, instructors encountered many implementation challenges and reported that redesigning their courses around MOOCs was very time-consuming.

One limitation of the study was that, in all but one case, these findings were based on just one iteration of the newly redesigned courses. In addition, they relied on early versions of MOOCs and on the Coursera platform, neither of which was designed for institutional licensing. Our overall conclusion, therefore, was that the models we tested could offer significant value, but that the conditions were not yet in place to make them viable on a large scale. We hypothesized that, given the opportunity to run additional iterations of blended MOOC courses, faculty members might be able to iron out some of the wrinkles we saw the first time around and improve the student experience.

We now have a wisp of evidence to support this hypothesis. In the fall of 2014, a professor at Bowie State University worked with a colleague to repeat the test using a MOOC on Coursera, making one major change to the course design. In the first test, students had been assigned to work with the MOOC during the first half of the semester, and they reported some of the highest levels of dissatisfaction of any course in the study. Instructors found that the MOOC assumed more comfort with terminology and basic computer science principles than incoming first-year students at this institution actually had. In the second iteration, the faculty members reversed the sequence of the course, moving the MOOC-based modules to the second half of the semester. The instructor introduced the subject, its principles, and its terminology during the first few weeks so that students were better able to understand and engage with the MOOC. Students in this iteration reported higher satisfaction levels than either the treatment or the control cohort the year before.

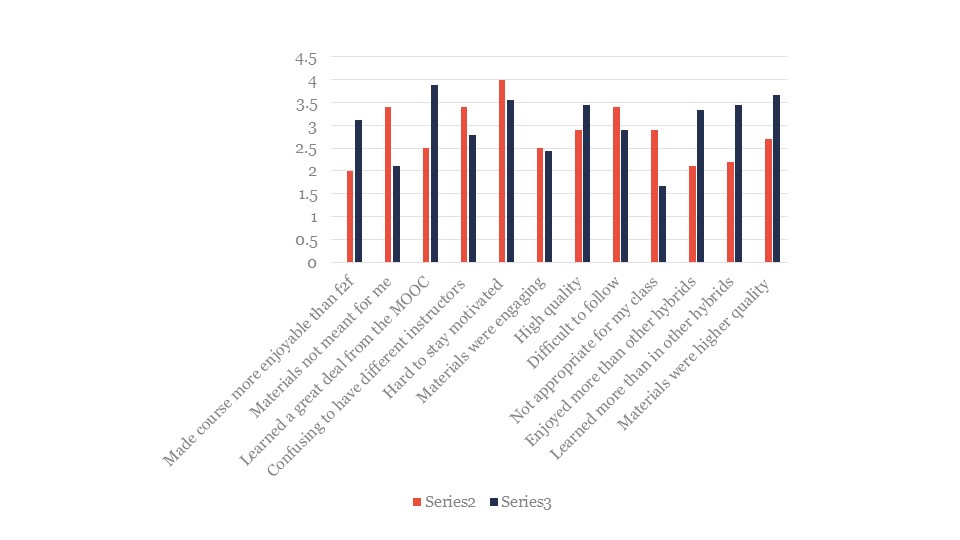

Interviews with students indicated that some found real value in having access to an instructor "twenty-four hours a day." Students rated every component of the course as having higher educational value than the previous year's students had, including in-class activities as well as lecture videos. Their ratings of the MOOC itself were also more positive.

Students also received higher average grades in the second round, but these grades are hard to interpret given the change in the course sequence and the lack of controls for student background characteristics.

The instructor (who had not participated in the first round of tests) was pleased with the way the course went. She considered the MOOC an improvement over the set of YouTube videos she had relied on in the past, because of its coherence and its integration of assessments with lecture videos. She was pleasantly surprised that, at the end of the course, students were able to complete a particularly advanced task that she would not normally have assigned. The second iteration of the course also took far less time to plan and deliver, in large part because the instructors reused the same version of the MOOC (i.e., not the newer version available on Coursera).

It is essential to note that there were only 18 students in the section, and only nine of them completed the survey. That is a 50% response rate, lower than the previous year's. With such a small sample, we did not attempt to control for background characteristics. The previous year's test covered a far larger student population: 177 students were enrolled across the two cohorts, and 124 of them (roughly 70%) completed surveys.

This small group of students clearly had a better experience than those in either the treatment or the control group the year before, but there are multiple possible explanations. One is that the student experience can be enhanced by well-designed and well-executed use of these tools, and that additional rounds of tests would produce quantitative evidence of these benefits. (This would come as no surprise to experts in course redesign, who know that it takes a few tries to get a new course format right!) It is also possible that external factors (e.g., instructor quality) accounted for the difference, and only additional testing can tell us for sure. This spring we are running another iteration of a second blended MOOC test, and we hope it will provide further insight into these questions.