CIC Consortium for Online Humanities Instruction

Evaluation Report for First Course Iteration


Table of Contents

- Summary of Findings

- I. Description of evaluation data

- II. Description of courses and participants

- III. Student learning

- IV. Student experience

- VI. Institutional Capacity

- V. Costs

- VI. Overall assessment

- VII. Preparation for next iteration of courses

- Appendix I: Instructor Survey

- Appendix II: Student Survey

- Appendix III: Rubric for Peer Assessment


Summary of Findings

This report provides our preliminary analysis of evidence generated from the planning period and first iteration of CIC Consortium courses. It begins with a summary of our findings, followed by a description of the evaluation data and a presentation of much of it for readers interested in delving deeper. It is important to note that these courses finished very recently, and we (like the faculty members involved) are still processing what we have learned. We have amassed a considerable body of evidence, and naturally it does not all point in the same direction. Thus we see these findings as emergent and expect that they will continue to evolve as we digest the evidence.

The CIC Consortium set out to address three goals:

- To provide an opportunity for CIC member institutions to build their capacity for online humanities instruction and share their successes with other liberal arts colleges.

- To explore how online humanities instruction can improve student learning outcomes.

- To determine whether smaller, independent liberal arts institutions can make more effective use of their instructional resources and reduce costs through online humanities instruction.

Our preliminary findings from the first iteration of Consortium courses are as follows.

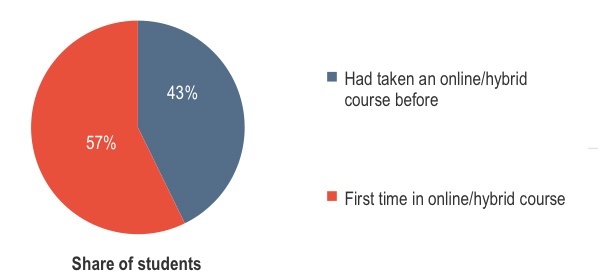

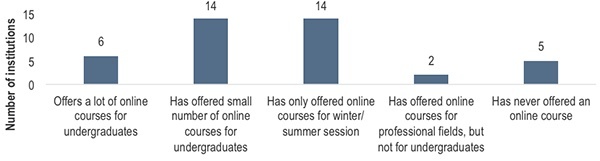

Goal 1: Building Capacity

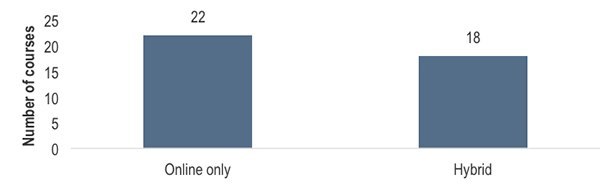

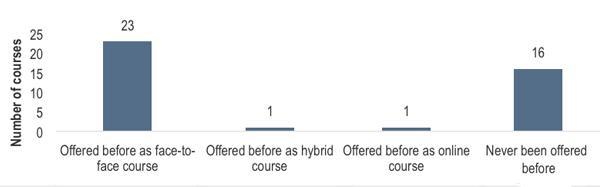

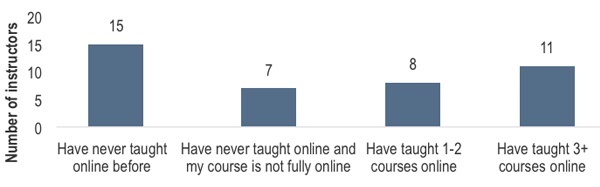

The Consortium has already accomplished a great deal. In the spring semester Consortium members offered 41 online and hybrid courses, of which 23 were online or hybrid versions of existing face-to-face courses and 16 were entirely new. Nearly three quarters of the instructors involved had little or no experience teaching online. Only about one third of Consortium member institutions had significant experience offering online courses to undergraduates. This initiative also provided new learning experiences for students, as 57 percent of those who responded to surveys had never taken an online or hybrid course before this semester.

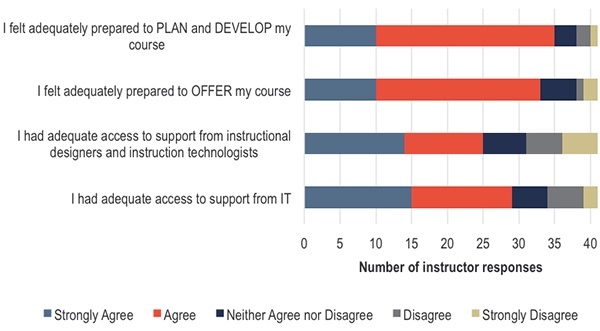

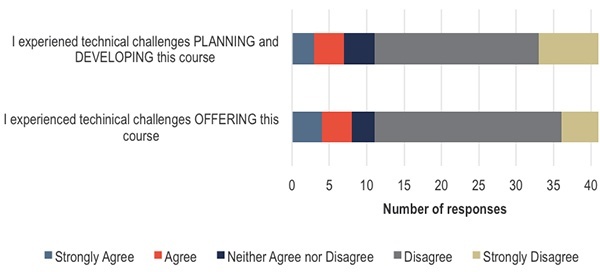

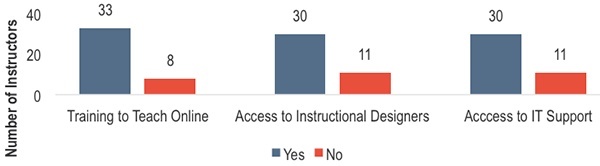

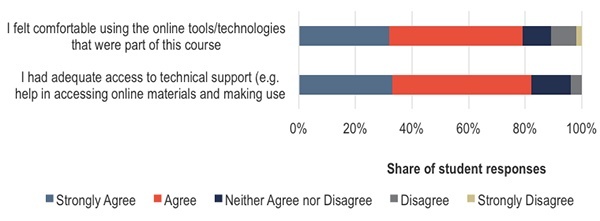

While many instructors reported experiencing technical challenges at some point, the vast majority felt that they had adequate support for developing and delivering these courses. Eighty percent of instructors said they received training to teach online (at least in one case through another Consortium institution) and 75 percent said they had access to instructional designers to help them teach their courses. Over 80 percent of instructors indicated that they felt adequately prepared to plan, prepare and deliver their courses. Along similar lines, 80 percent of students indicated that they had adequate access to technical support.

We were struck by the wide range of technologies used by instructors in their courses. They relied heavily on features available through standard learning management systems such as Blackboard, Canvas and Moodle, with Canvas receiving especially positive reviews. They also incorporated many less familiar tools such as BlueJeans, Zoom, VoiceThread, Madmagz, the Big Tent social media site, Dipity, ThingLink and Digication, not to mention commonly used sites such as Skype, Spotify, WordPress, YouTube and Twitter. Many instructors used Google applications for collaborative student work and generally found these to work well (aside from Hangouts, which got mixed reviews). Faculty members also incorporated domain-specific online resources such as LitGenius.

These findings indicate that most Consortium members are able to marshal the resources necessary to support online instruction, even in institutions with relatively little experience providing undergraduate courses online.

Goal 2: Enhancing Student Learning

Because student learning is so central, we will examine this goal at length and in several parts: did students achieve the intended learning outcomes for their courses? Did the courses succeed in fostering student engagement and building a strong sense of community? And lastly, how did student learning in the online/hybrid courses compare to learning in traditional face-to-face instruction?

Did students achieve the intended learning outcomes for their courses?

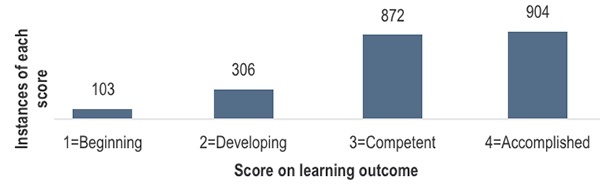

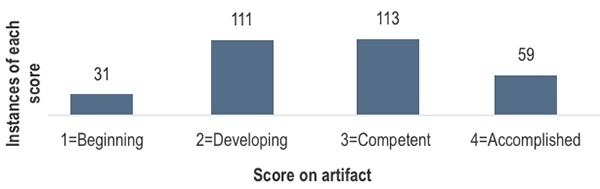

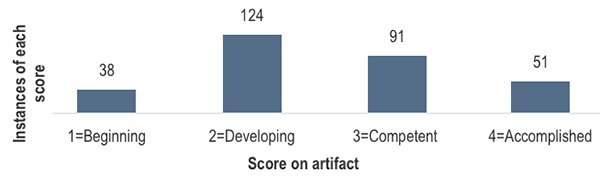

The answer to this question appears to be, for the most part, “yes.” Instructors gave their students high scores on the learning objectives they identified, with an average of 3.17 on a four-point scale (representing Beginning, Developing, Competent, and Accomplished). Interestingly, peer assessors brought a more critical eye to student artifacts, awarding an average score of only 2.61 (meaning that on average students demonstrated achievement at a level between Developing and Competent). In instructor and student surveys, perceptions of student learning were quite positive, particularly in terms of students’ intellectual engagement and achievement.

In survey open fields, many instructors commented favorably on the quality of student work. For example, one wrote:

Never having taught an online course, I was not sure if I would be able to create the kind of interactive experience for which I was hoping. The depth and range of the students’ discussion contributions and written work, however, was far beyond my expectations (for those who did in fact participate). I was very pleased with the end result.[1]

Several students also said they benefited from taking these courses and particularly enjoyed being introduced to new ways of learning and collaborating. One student wrote:

It was fun and interactive, I learned a lot and being online was actually really helpful because we could easily pull something from the internet to help understand what is going on or to get more information about a subject/author.

On the other hand, several faculty members commented that their students did not seem to have “bought into” the online components of their courses and were resistant to engaging with them. We have seen this dynamic in past research, particularly when the online and face-to-face components of a course are not well integrated and when students do not understand the rationale behind inclusion of the online part.

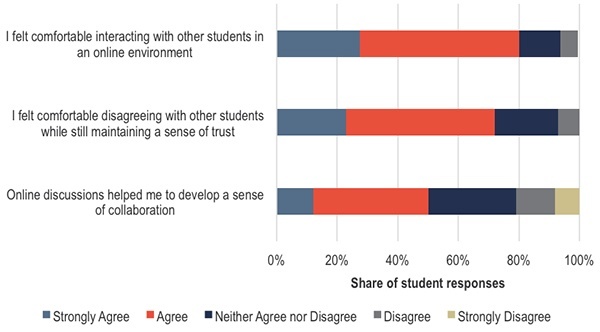

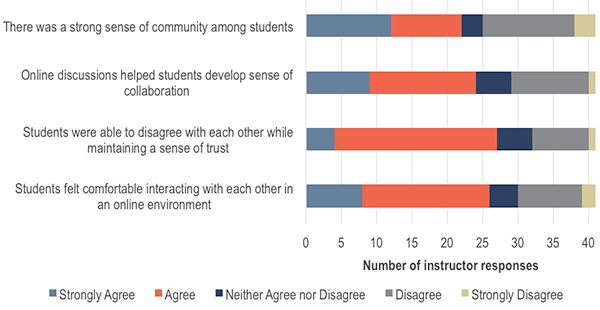

Did the courses succeed in fostering student engagement and building a strong sense of community?

Both instructors and students described some dissatisfaction with the level of social engagement and sense of community among students. While a few students felt more comfortable expressing opinions and interacting via asynchronous, text-based discussions, many more said they missed face-to-face interaction – particularly with other students.

A student commented:

It was hard to establish relationships with the other students because everything felt removed. Not being able to interact in person really hindered the community feeling that typically is established in a traditional classroom.

There were many student comments of this nature. It is important to note, however, that this experience was not at all universal: a number of students commented favorably about the online format. For example, a student wrote:

I enjoyed the hybrid format. It allowed us to voice opinions which we might not say in person (while being respectful, of course.)

Likewise, several instructors were encouraged by the level of interaction in their courses. One wrote:

I didn’t expect the students to form a sense of community so easily; they were genuinely interested in each other and that helped to bridge the communications divide.

Another commented:

The excellent exchange of perspectives in the online discussions was a sharp contrast to the sometimes less-than-dynamic discussions we had F2F in class. However, I was pleasantly surprised by the extent of the students’ learning reflected in their individual final projects.

The online interaction between professors and students was also challenging for some, particularly when dealing with less motivated students. One professor reported feeling “shell-shocked” by students’ lack of engagement in the online format. Another wrote:

I found it very hard to reach out to students who were not doing well in the class. My students seemed to either “get it” and thrive, or be pretty MIA.

An instructor stated:

…Some students (who I had taught previously) who are quiet/silent in f2f courses were really able to share their ideas better in the online environment. On the other hand, other students were almost completely checked-out of the course, and I found it very difficult to help them re-engage.

Students also expressed some dissatisfaction with the lack of personal interaction with their professors. One wrote:

I think I would have benefited from more in-person instruction, and I didn’t feel very connected with the professor.

In order to build social engagement, instructors relied heavily on discussion boards, with mixed results. Some instructors were very pleased with the quality of student comments in discussion boards, while others found it difficult to stimulate active engagement. A number of instructors cited this as an area for improvement in the second iteration of the course. One wrote:

Overall, the discussions were not as in-depth as I would have liked …In retrospect, I think my discussion prompts and assignments for the online interaction should have been far more specific and problem/project-based than open-ended and exploratory…

Students gave relatively low ratings to the value of discussion boards in helping them to understand others’ perspectives and in developing a sense of collaboration, also indicating room for improvement.

Another prevalent approach to building community was use of synchronous technologies such as Google Hangouts, Skype and Adobe Connect. One professor wrote: “We met synchronously, which was very important for building community and a sense of trust.” Quite a few, however, were frustrated by technical issues, and a couple reported feeling disappointed that students did not take advantage of opportunities for online office hours.

How did student learning in the online/hybrid courses compare to that in traditional face-to-face instruction?

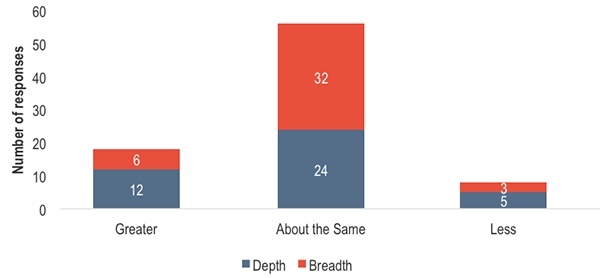

This is perhaps the hardest question to answer, since we do not have comparable data from traditional courses. The instructor scores of learning outcomes indicate that students met their goals for their respective courses, and survey responses from instructors also provide favorable comparisons for the online format. While the majority of instructors believed that the depth and breadth of student learning was about the same in online/hybrid courses as in traditionally taught ones, a greater number of instructors felt that students learned more in the online/hybrid courses than felt they learned less, particularly in terms of depth. One instructor wrote:

I think approaches that delivered content material worked especially well: videos, websites, readings, lectures. I think student learning of content material is greater in the online asynchronous format than from face-to-face instruction.

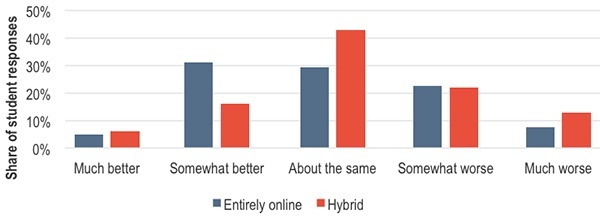

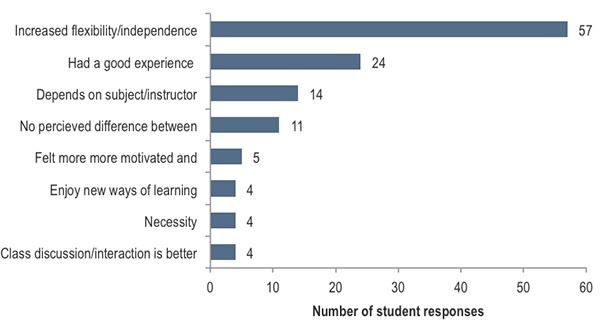

Students were almost evenly split in their views on how online/hybrid courses compare to traditionally taught ones: roughly one third rated them as better, one third rated them as worse, and one third rated them about the same. Students who ranked online/hybrid courses better cited a number of reasons for their response, the most common of which was the increased flexibility that online/hybrid courses afforded. One student wrote:

The flexibility was far more suited to my schedule than a traditional class. The various mediums used to teach were extremely helpful and were things that I don’t normally use in a traditional course (online videos, audio clips, websites).

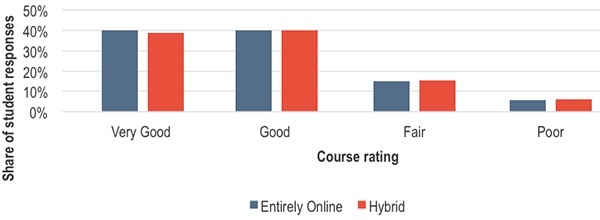

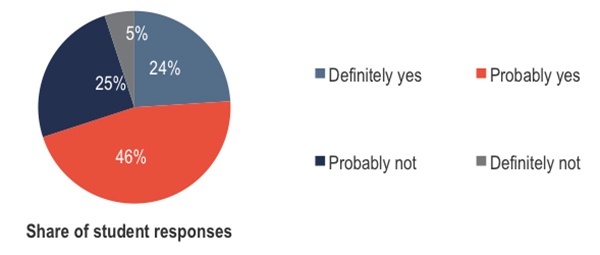

While students were split in their comparisons of online/hybrid courses to face-to-face courses, responses to other survey questions suggest more positive perceptions: 79 percent of students rated CIC Consortium courses as Good or Very Good, and 70 percent said they would probably or definitely take another online/hybrid course in the future.

We saw evidence that student learning can be enhanced through online instruction when technologies are used in service of pedagogical strategies or the online format creates flexibility for different approaches. Professors described a number of positive effects related to online instruction. One wrote:

It forced me to reevaluate how I teach, including assessment methods and more creative ways to engage students with material. I would also like to think it also helped put the spark of discovery back into the classroom. Presented with the right sources and supplementary materials, students are forced to find their own way through the material without being told at every turn what it all means (which a face-to-face lecture can easily devolve into), and as a result they become seekers rather than better note takers.

Another:

Experiential learning worked well, students went on several field trips to explore Caribbean culture in Boston, they learned to work independently, they did a lot of research and handed in original presentations and analytic essays.

And another:

I like using multimedia so easily. I spend a lot of time planning my slides and the content (more than in a face-to-face class because I can’t wing it as easily online). But, as in a face-to-face class, we always find interesting connections and online we could post links to videos or photos, etc. to answer questions as they arose and explore side issues that were of great interest to the class. It’s student centered and THEY are often the experts, finding resources to share with me and I love learning from them.

And another:

Doing the course as hybrid made me do more careful and creative planning than usual. I incorporated many more digital resources, which enhanced learning and helped us make older texts (like Robinson Crusoe) more current. The hybrid format was a revelation to me in terms of connecting texts from my field (18th-century studies) to contemporary responses / retellings of those texts.

Students also found value in new teaching methods and mediums. One wrote:

[This course] was set up in such a way that I actually felt like I got to experience the course in more dimensions than I would have in a more traditional course setting. There were tons of opportunities for interactions with other students in a variety of mediums, and always some way to participate.

Several strategies emerged as producing particularly good results, such as use of short videos/mini-lectures and assigned blogs or learning logs. A professor wrote:

Students developed a respect for process, through daily writing. The daily writing also allowed them to work through the theme of the course through writing.

Another wrote:

Helping students draft and write digital narratives–building on my traditional role as a coach and editor for student writers–worked very well for student blogging.

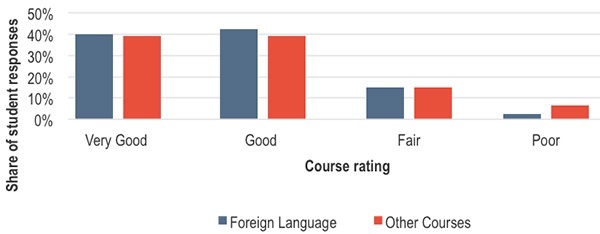

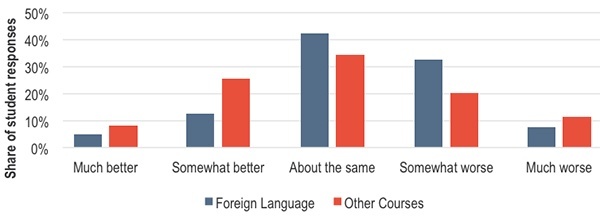

Taking into account all these data, we observed a number of benefits of online instruction for student learning and gained some reassurance. We did not find clear patterns of differences between fully online and hybrid courses, or between courses taught in English versus foreign languages.[2] There is also no indication that online courses demanded less of students. If anything, students found that succeeding in an online course required more work (as long as they remained engaged). The number of faculty members who reported positive experiences far outnumbered those who reported poor ones.

On the other hand, the loss of personal interaction with instructors and among students should not be discounted. In some cases lack of interaction was associated with small class size or a particularly disengaged group of students, which could also be problematic in a traditional format. As instructors gain experience, it is likely that engagement can be improved through more effective use of technology and different pedagogical strategies. Still, there may be a trade-off between students’ intellectual development and progress and their social development. Moreover, there may be some instructors and students for whom this format will never be optimal.

Goal 3: Increasing Efficiency

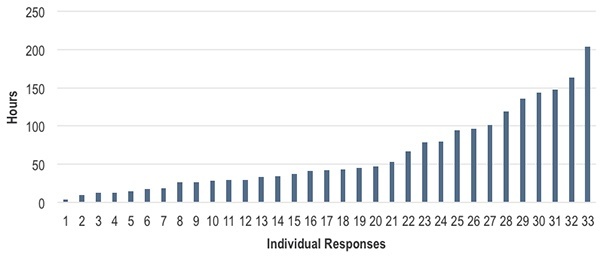

The potential for cost efficiencies is an important consideration and goal of this initiative. Based on our analysis of timesheets and instructor surveys, it appears that any eventual economic benefits will derive from sharing of courses across the Consortium, not from instructor time savings in teaching them. If anything, online and hybrid courses entail additional start-up costs in terms of faculty planning time and support costs, not to mention technology infrastructure. Instructors may well save time in the second iteration of offering these courses, but evidence from the first iteration of courses does not indicate that instructors themselves grow more efficient in planning and delivering online courses as they gain experience.

Consistent with other research, we saw no indication that online instruction saves time for faculty; if anything, the opposite is true. A professor commented:

My workload was far more extensive than in a classroom course, and I was in a constant fear of falling behind. Similarly, I felt that it took an immense, constant effort on my part to fuel and energize the course – even to establish my ‘presence.’

Another wrote:

I spent more time on course administration than I would in a traditional course. I suppose that this is just a transference of the traditional classroom management issues to a different domain, but the technology made some parts of the course less, not more, efficient. I have in mind grading, trying to do pair or group work, moving around in the classroom.

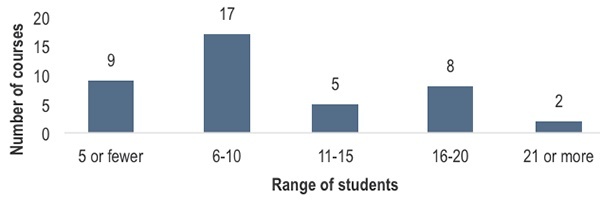

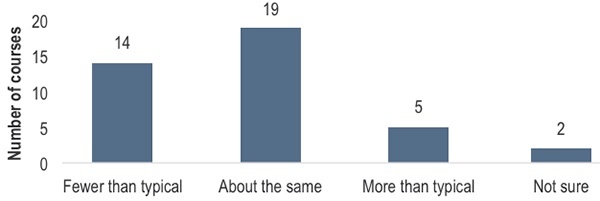

What is clear is that there is room for enhanced efficiency through increased enrollment in upper division humanities courses. Sixty-five percent of the CIC Consortium courses had ten or fewer students, and nearly a quarter had fewer than six students. Evidence does not suggest, however, that making courses available online will generate more demand within an institution, as most instructors said their courses had roughly the same or fewer students than a comparable face-to-face course. In order to reduce cost per student, new enrollments would need to come from students at other institutions. Yet Consortium members cannot all be net importers of students; there will need to be a “balance of trade” predicated on respective program strengths.

The first iteration of courses was largely confined to individual institutions, but the peer assessment exercise provides assurance that there is a reasonable level of consistency of courses across institutions. Peer assessors reported that they did not observe substantial differences in the quality of student work across institutions.[3] Indeed, they were more struck by the differences in approach among instructors even within the same institution.[4]

Preliminary conclusion

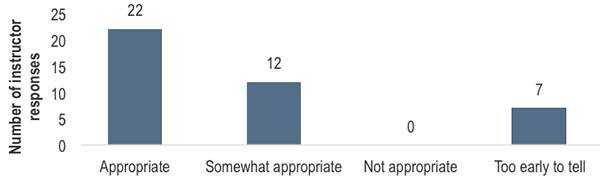


Our preliminary conclusion from the first iteration of courses is that online learning can be an appropriate format for delivering upper division humanities courses, particularly when tied to specific aims, such as increased experiential learning, allowing students to continue coursework while studying abroad, and enabling cross-enrollment with other institutions. Evidence from the first iteration of courses indicates that online instruction can be implemented successfully and, under the right circumstances, in ways that are consistent with the mission and goals of liberal arts institutions. The next iteration of these courses will provide an opportunity to further interrogate these findings and, in particular, to investigate the feasibility of cross-enrollment within the CIC Consortium.

I. Description of evaluation data

The CIC Consortium evaluation draws on five types of data:

Instructor survey [N=41]: this survey was administered at the end of the spring term. Sections related to student experience in the online course were derived from the Community of Inquiry survey instrument, which focuses on three constructs: instructor presence, social presence, and cognitive presence.[5]

Instructor timesheets [N=39]: these were collected at two points in time: the end of the planning stage (January) and the end of the spring semester (June). Instructors could continue to categorize time as course planning and design during the spring semester.

Student surveys [N=209]: surveys were submitted for 32 courses. Each course had between 1 and 14 surveys, with an average of six surveys per course. Surveys were distributed fairly evenly across course type: 108 came from entirely online courses and 101 from hybrid courses. Sections of the student survey were also derived from the Community of Inquiry survey instrument and share a number of items with the instructor survey. Instructors had the option of administering surveys locally or having Ithaka S+R administer them.

Instructor scores on learning outcomes [N=376 students; 2,186 scores]: these are scores on a four-point scale [Beginning, Developing, Competent, Accomplished] for the learning objectives identified by each instructor for his/her own course.

Peer assessment scores [N=174 artifacts; 319 scores]: these are scores on the same four-point scale, using a collaboratively developed rubric covering two learning outcomes common to upper division humanities courses. An alternate version of the rubric was available for coursework done in foreign languages. All English-language student artifacts were reviewed by two peer assessors. Foreign language artifacts were reviewed by single assessors, in most cases colleagues from instructors’ respective departments. Rubrics are attached in Appendix III.
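Both instructor and peer scores are averages on the same four-point scale, so an average is best read as falling between two named levels. The short sketch below illustrates that reading. It assumes (the report does not say) that the levels were coded 1 through 4, and the function name `bracketing_levels` is hypothetical; it simply shows how the peer assessors' 2.61 and the instructors' 3.17 map onto the levels they fall between.

```python
# Hypothetical sketch: assumes the rubric levels were coded
# Beginning=1, Developing=2, Competent=3, Accomplished=4.
LEVELS = ["Beginning", "Developing", "Competent", "Accomplished"]


def bracketing_levels(mean_score: float) -> tuple[str, str]:
    """Return the pair of rubric levels that an average score lies between."""
    if not 1.0 <= mean_score <= 4.0:
        raise ValueError("score must lie on the 1-4 scale")
    # Lower level's 1-based code; capped at 3 so a perfect 4.0
    # still maps to the top band (Competent to Accomplished).
    lower = min(int(mean_score), 3)
    return LEVELS[lower - 1], LEVELS[lower]


print(bracketing_levels(2.61))  # peer assessors' average
print(bracketing_levels(3.17))  # instructors' average
```

Under this assumption, 2.61 falls between Developing and Competent, matching the reading given in the Summary of Findings, while 3.17 falls between Competent and Accomplished.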

Limitations

- There is no standardized assessment that met the Consortium’s needs for the types of learning outcomes expected of upper division humanities courses. Consequently, there was no objective, widely accepted way to measure or compare student learning in Consortium courses with that in traditionally taught courses.

- It was not possible to implement the methods used to measure student learning in Consortium courses in comparable traditionally taught courses. Our judgment of the comparison between the quality of student learning in online/hybrid courses and traditionally taught ones is thus based primarily on subjective assessments from instructors triangulated with other data sources. Peer assessments of student artifacts provide an objective measure of student learning, but again we lack a baseline for comparison.

- We do not have any student survey responses for one fifth of the courses, and very few for others.

- Our cost data is mostly limited to instructor time use. Instructor timesheets and surveys give us some sense of the demands placed on support units, but these do not capture costs such as infrastructure and bandwidth.

II. Description of courses and participants

How many courses were offered through the Consortium?

Were these new or existing courses?

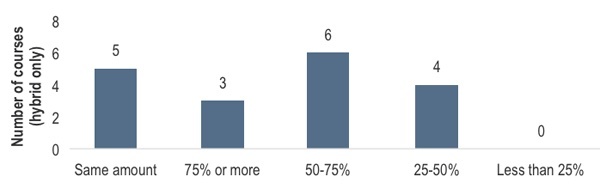

How much face-to-face time did hybrid Consortium courses have compared to traditional courses?

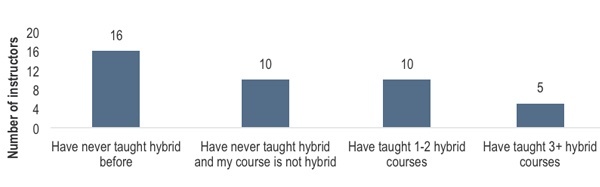

What experience did instructors have teaching online before the spring semester?

What experience did instructors have teaching hybrid courses before the spring semester?

How many students were exposed to online/hybrid courses for the first time through the Consortium?

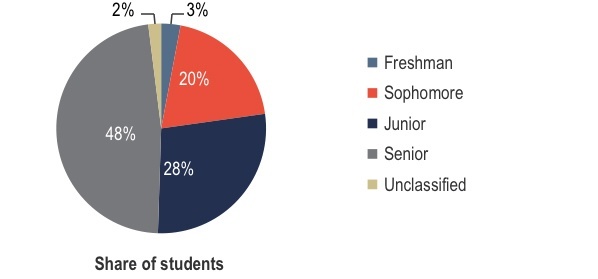

What year in college were these students?

How many students enrolled in each of the Consortium courses?

How does this compare to a typical course of this nature?

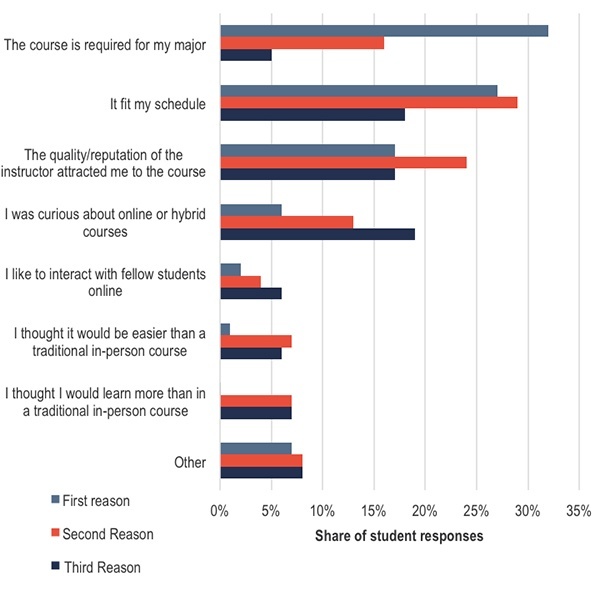

Why did students choose to enroll in these courses?

III. Student learning

Did instructors find that students achieved the desired learning outcomes for their courses?

Based on peer assessment of artifacts, how many students were able to interpret meaning in their assignments?

Based on peer assessment of artifacts, how many students were able to synthesize knowledge in their assignments?

What was students’ perception of their social presence?

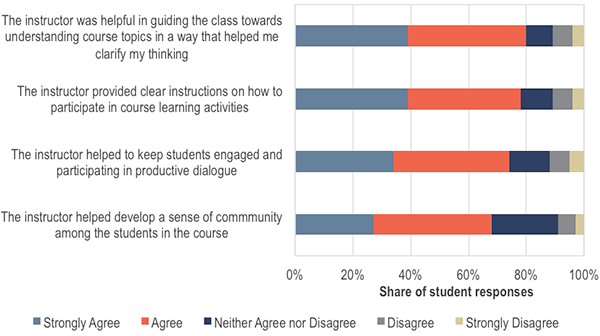

What was students’ perception of instructor presence in their courses?

How did instructors perceive students’ social presence in their courses?

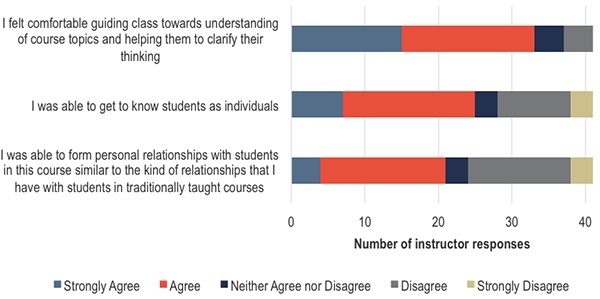

How did instructors perceive their own presence in their courses?

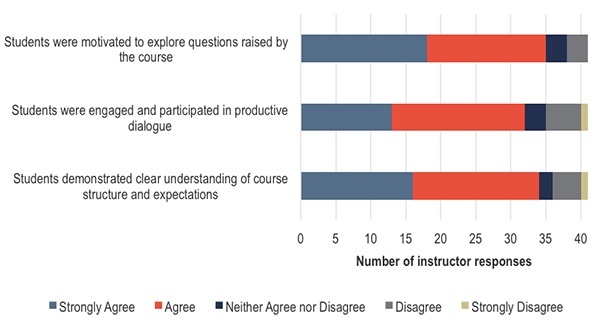

How did instructors perceive students’ cognitive presence in their courses?[6]

How did student learning in the online/hybrid courses compare to student learning in traditional face-to-face instructional settings?

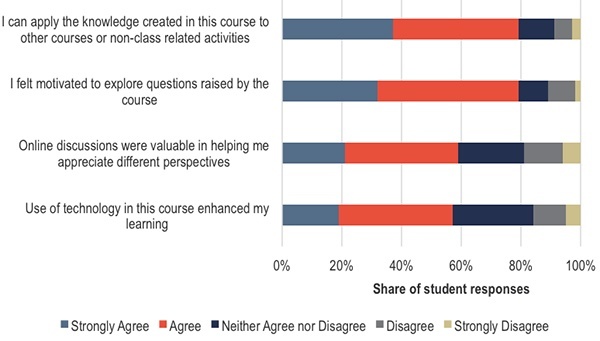

How did students perceive their own cognitive presence in their courses?

IV. Student experience

How did students rate the courses overall?

Did students rank foreign language courses differently?

How did students compare this course to a traditional in-person course?

How did foreign language courses compare to traditional in-person courses?

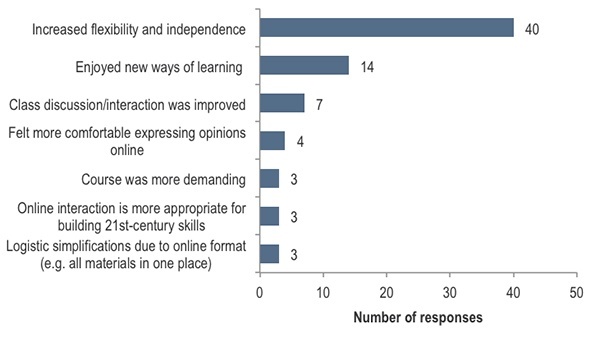

What did students like about this format?

Of those students who left a comment explaining why they rated their hybrid/online course as “better” or “much better” than a traditional in-person course, the largest shares cited the following reasons for their choice:

*Based on coded student comments. Responses could be coded in more than one category.

**Larger shares of students in entirely online courses cited flexibility as a reason for their choice (32 out of 40).

Example responses

Increased flexibility and independence:

I think that online was better because I was able to fit it in whenever I had time, instead of trying to schedule my life around it.

I was able to go at my own pace to study things that were of interest to me, with adequate time to ask questions and get feedback from both students and the instructor.

Enjoyed new ways of learning:

[The professor] does an amazing job of explaining the material through his PowerPoints and videos, whereas it is often difficult to grasp new material through solely an in-class lecture. Students are encouraged to watch the videos and ask questions.

Class discussion/interaction was improved:

The discussions really forced us to think for our own. In an in-person class it’s easy for one person to guide the discussion but in the online format it allowed all of us to have an equal say.

Felt more comfortable expressing opinions online:

I’m somewhat of a quiet person, so I expressed myself through writing more than I ever would have in a traditional course.

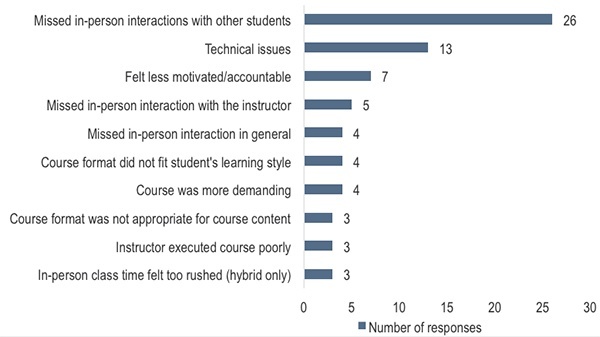

What did students dislike about this format?

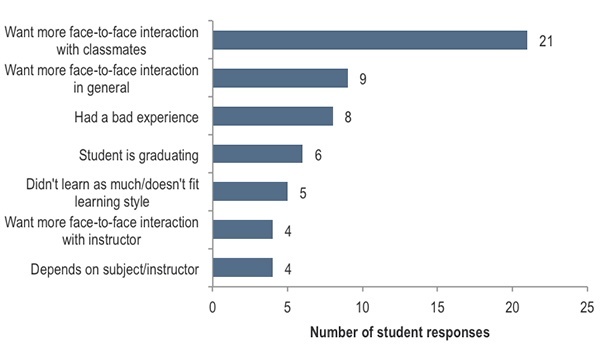

Of those students who left a comment explaining why they rated their online course as “worse” or “much worse” than a traditional in-person course, the largest shares cited the following reasons for their choice:

Example Responses

Missed in-person interactions with other students:

It was hard to establish relationships with the other students because everything felt removed. Not being able to interact in person really hindered the community feeling that typically is established in a traditional classroom. Because it was online, it was easy to get distracted during the course.

Missed in-person interactions with professor:

I think I would have benefited from more in-person instruction, and I didn’t feel very connected with the professor.

Felt less motivated/accountable:

It is easier to fall off the radar in a course like this. It is also easier to make this course an afterthought.

Course format did not fit student’s learning style:

The course itself was fine, and well setup. I think that I personally just learn better through verbal processing and discussion, and I didn’t take into account that I wouldn’t be able to do that in an online course.

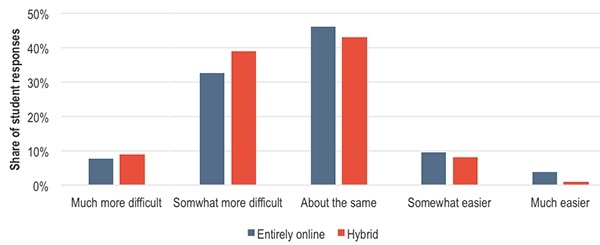

How did students rate the degree of difficulty for their online courses compared to other upper-level humanities courses?

VI. Institutional Capacity

What experience did Consortium members have offering online courses?

Did instructors feel they had adequate support to plan and teach online/hybrid courses?

Did instructors experience technical challenges offering, planning, or developing this course?

Did instructors have access to training, instructional designers and IT support?

Did students have access to technical support and feel comfortable using the online tools?

V. Costs

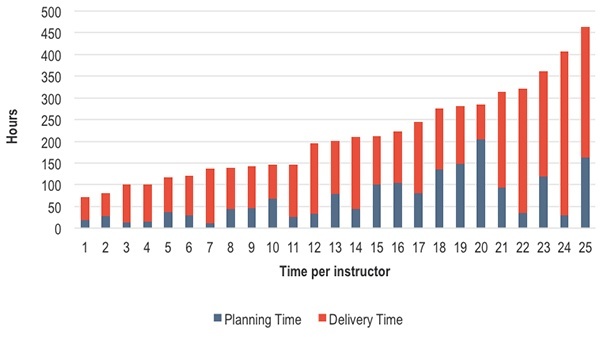

How much time did it take faculty to plan their courses?

What did faculty spend time on when planning their courses?

| Planning activity | Average (hours) | Median (hours) | 10th percentile (hours) | 90th percentile (hours) |
|---|---|---|---|---|
| New Course Content Design and Creation | 43.6 | 24 | 2.3 | 110.5 |
| Start-up Activities | 8.1 | 5 | 0 | 22.5 |
| Dealing with Copyright Issues | 1.4 | 0 | 0 | 5.5 |
| Unusual Administrative Activities | 2.5 | 0 | 0 | 4.5 |
| Administrative Planning for Consortium Scale-Up | 0.9 | 0 | 0 | 4 |
| Course Planning for Consortium Scale-Up | 3.4 | 0 | 0 | 14 |
| Total Time Planning | 64.8 | 43.5 | 13.7 | 146.1 |

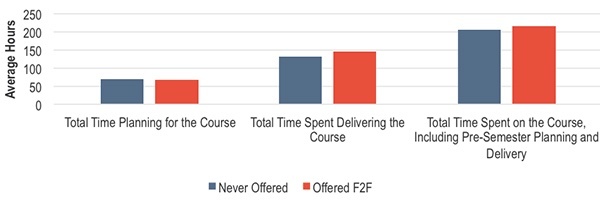

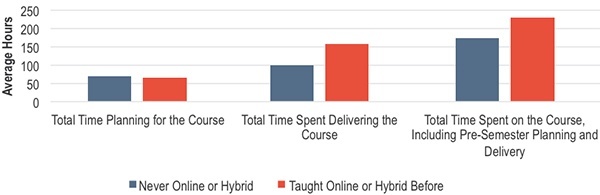

Overall, how much time did faculty spend on planning and delivering their courses?

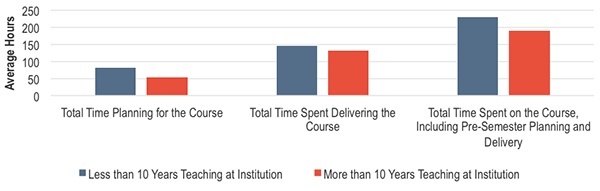

Does the time spent teaching vary by a professor’s experience at his or her current institution?

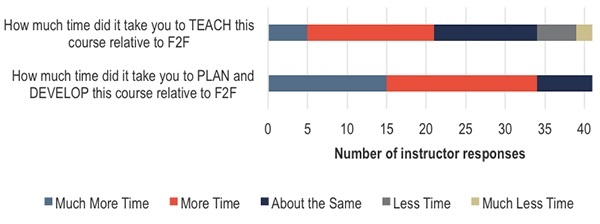

How did time spent on these courses compare to time spent teaching a traditional course?

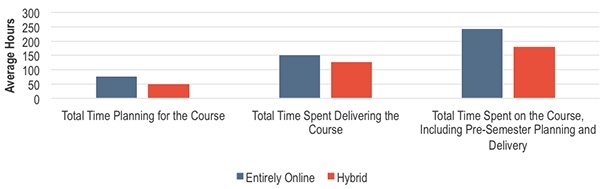

How did time spent vary by course format?

How much did faculty time vary by whether or not the course was being offered for the first time?

How much did faculty time vary by whether or not the faculty member had taught any course in an online or hybrid format before?

VI. Overall assessment

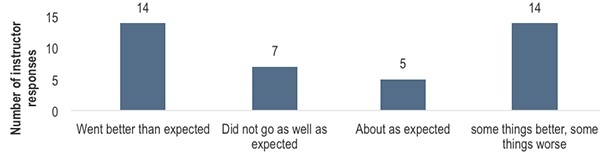

Overall, did Consortium courses go as expected?

Do instructors believe that the online/hybrid format is appropriate for teaching advanced humanities content?

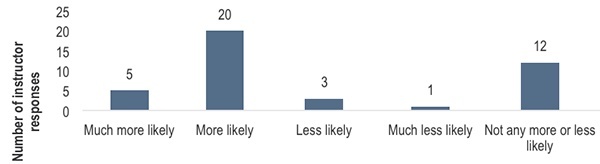

Are instructors more or less likely to encourage colleagues to teach online as a result of this experience?

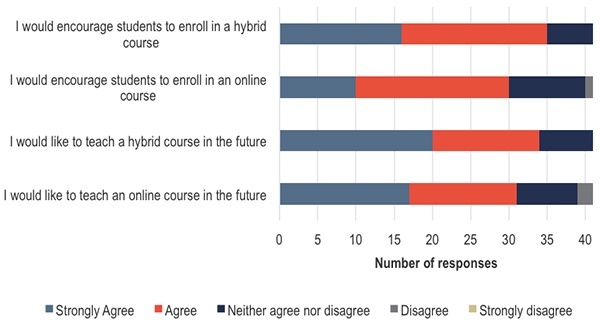

After this experience, what are instructors’ attitudes towards online teaching and learning?

Do students say they would take another online/hybrid course in the future?

Why would students take an online or hybrid course again?

Of those students who left a comment explaining why they responded “definitely yes” or “probably yes” when asked if they would take another online or hybrid course, the largest shares cited the following reasons for their choice:

Example Responses

Increased flexibility/independence:

The flexibility in schedule is very helpful since I am a working adult student. It allows me to do the online activities in my own time.

I like being able to choose when throughout my day I can do the material for the course. Also I feel like I learned the material better with less effort, because I was focused during the learning process and would take a break from the material.

Had a good experience:

This was a very positive experience for me, and I have at least a willingness to take another such course. I don’t think that the format would be my preference in every set of circumstances, but this particular scenario showed me that hybrid courses can go very well.

Depends on subject/instructor:

Yes I would, as long as the professor was as adaptable as [the one I had for this course].

Why would students not take an online/hybrid course again?

Of those students who left a comment explaining why they responded “definitely not” or “probably not” when asked if they would take another online or hybrid course, the largest shares cited the following reasons for their choice:

Example Responses

Want more face-to-face interaction:

I really enjoy face to face discussions, especially in upper level seminars. I think in this way, the class can create deeper, more engaging hypotheses and reach an understanding to a higher degree than in the online classroom setting.

Had a bad experience:

I would not take another hybrid English course because of too many issues that I experienced with this hybrid course

VII. Preparation for next iteration of courses

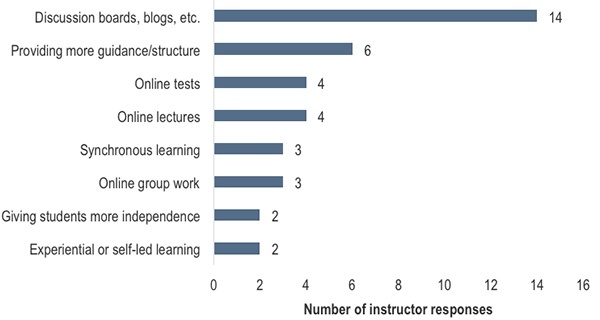

What did instructors find worked well?

Example Responses

Discussion Boards, blogs, etc.:

The forum postings and responses on the readings. Students typically were more detailed in their responses to the texts and to one another than is usually the case in F2F class discussions.

Providing more guidance/structure:

Clarity and structure. My students needed (and appreciated) much more guidance and guidelines regarding assignments, discussions, etc. than in a f2f course.

Experiential or self-led learning:

Experiential learning worked well, students went on several field trips to explore Caribbean culture in Boston, they learned to work independently, they did a lot of research and handed in original presentations and analytic essays.

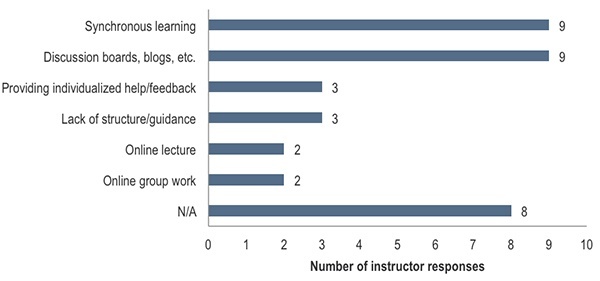

What did instructors find didn’t work well?

Example Responses

Synchronous learning:

I attempted to hold online, synchronous office hours for the day after a new unit was posted, but few students participated. I had been hoping for this to be a substitute for students stopping after class, but it did not work.

Providing individualized help/feedback:

I found it very hard to reach out to students who were not doing well in the class. My students seemed to either ‘get it’ and thrive, or be pretty MIA.

Lack of structure/guidance:

In retrospect, I think my discussion prompts and assignments for the online interaction should have been far more specific and problem/project-based than open-ended and exploratory.

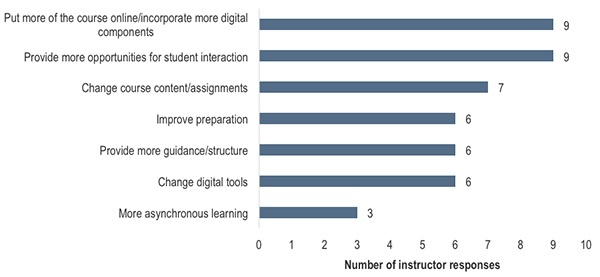

What will instructors do differently next time?

Example Responses

Put more of the course online/incorporate more digital components:

I am probably going to change some of the assignments, and utilize more technological applications to vary and increase student interaction. I am going to change the course container from solely Blackboard to additional use of Google Community. I may make other changes as well.

Provide more opportunities for student interaction:

I would add more interaction between the students (they had to work with partners on several occasions during the semester but I feel like we didn’t interact as a class), maybe using the discussion board more.

Change course content/assignments:

I will require three-four books instead of two for the class and use less supplementary reading materials. I also need to rethink the experiential component, which was a huge hit for the kids this semester.

Improve preparation:

I got behind in my preparation this time and was, a couple of times, just a few days ahead of the students in terms of readings, providing feedback, and even uploading material for discussion. With my basic Power Points now all created, I can change a few things, create a few more presentations, and be more on top of things the next time around.

Provide more guidance/structure:

More structured online conversations, more structured group assignments, more (but shorter) video mini-lectures before class.

Change digital tools:

I would find a better video conferencing platform with no time lag issues and better sound quality. I would also create gravity forms that would allow me to correct tests online rather than printing the online tests and making corrections on a hard copy.

More asynchronous learning:

I am playing with thoughts of more asynchronous sessions (only did one a month or so this semester and I treasure the conversations and connections so I am still hesitant about this).

Appendix I: Instructor Survey

Instructor Survey Instrument

Dear Consortium Colleague,

Thank you for taking the time to complete this survey. All questions in this survey refer to the course you taught this semester as part of the Consortium for Online Humanities Instruction. While we have pieces of this information from various sources (proposals, interviews, etc.), this survey will ensure that we have comprehensive information about all the participants’ courses and backgrounds. This will enable us to assess the impact of institutional and background factors on your experiences teaching online. We also wish to learn about your experiences and observations as a result of teaching your course. This survey should take about 30-40 minutes of your time to complete. If you wish to pause while filling out the survey, your work will be saved and you can return to it later.

Background

- What is your institutional affiliation? ___________

- How many years have you been teaching at your institution? __________

- What is your primary departmental affiliation? ______________

- What is the name and number of your course? ______________

- What is the primary format for your course this semester?

  - My course is entirely online.

  - My course is hybrid.

- Please select the response that best describes your institution’s experience with online courses:

  - My institution offers a lot of online courses for undergraduates.

  - My institution has offered a small number of online courses for undergraduate students in the past.

  - My institution has only offered online courses for undergraduates during winter and/or summer sessions in the past.

  - My institution has offered online courses in some professional fields, but none designed for undergraduates.

  - My institution has never offered an online course.

- What is your experience teaching online?

  - I have never taught online before this semester.

  - I have never taught online and my course this semester is not fully online.

  - I have taught 1-2 courses online before this semester.

  - I have taught three or more online courses before this semester.

- What is your experience teaching hybrid courses (i.e., courses that combine both online and face-to-face components)?

  - I have never taught a hybrid course before this semester.

  - I have never taught a hybrid course and my course this semester is not a hybrid.

  - I have taught 1-2 hybrid courses before this semester.

  - I have taught three or more hybrid courses before this semester.

- [If entirely online, skip to #9] How much face-to-face class time does your course have?

  - My course has the same amount of class time as a traditional course.

  - My course has 75% or more of the class time of a traditional course.

  - My course has 50-75% of the class time of a traditional course.

  - My course has 25-50% of the class time of a traditional course.

  - My course has 25% or less of the class time of a traditional course.

  - Other (please describe) _________________

- Has the course you taught as part of the Consortium been offered before?

  - This course has been offered before as a face-to-face course.

  - This course has been offered before as a hybrid course.

  - This course has been offered before as an online course.

  - This course has never been offered before.

- What kinds of modifications did you make to your course for this semester?

  - I created a new course from scratch.

  - I modified an existing face-to-face course to make it a hybrid/online course.

  - I enhanced an existing online/hybrid course.

  - Other (please describe) _______________

- How many students enrolled in your course this semester (final enrollment, after drops and adds)?

  - Five or fewer

  - 6-10

  - 11-15

  - 16-20

  - 21 or more

- How does the number of students who enrolled this semester compare to the typical enrollment for a course of this nature at your institution?

  - Fewer students enrolled in this course than typically do for a traditionally taught course of this nature.

  - About the same number of students enrolled in this course.

  - More students enrolled in this course than typically do for a course of this nature.

  - I am not sure.

Preparation and support

- Did you participate in any kind of training to teach online? [yes/no]

- [if yes] Please describe the training you received before teaching this course. For example, who provided the training? What was the duration in terms of hours or weeks? ______________

- Did you have access to instructional designers and/or instructional technologists at your institution to help you design and build your course? [yes/no]

- [if yes] Please estimate how many hours of instructional designer/instructional technologists’ time you used to plan and build this course. ______________

- Did you have access to IT support to design, build, and/or manage your course? [yes/no]

- [If yes] Please estimate how many hours of IT staff time you used for this course. ______________

- Please indicate the extent to which you agree with each statement:

| Statement | Strongly disagree | Disagree | Neither agree nor disagree | Agree | Strongly agree |
|---|---|---|---|---|---|
| I felt adequately prepared to plan and develop my online/hybrid course this semester. | | | | | |
| I felt adequately prepared to offer my online/hybrid course this semester. | | | | | |
| I had adequate access to support from instructional designers and/or instructional technologists for this course. | | | | | |
| I had adequate access to support from IT for this course. | | | | | |
| I experienced significant technical challenges planning and/or developing my course. | | | | | |
| I experienced significant technical challenges offering my course. | | | | | |

- How much time did it take to plan and develop this course relative to a comparable face-to-face course?

  - Much less time

  - Less time

  - About the same time

  - More time

  - Much more time

- How much time did it take to teach this course relative to a comparable face-to-face course?

  - Much less time

  - Less time

  - About the same time

  - More time

  - Much more time

Student Learning / Experience

- Please select the statement that best fits your sense of the depth of student learning in this course:

  - The depth of student learning in this course was greater than in most traditionally taught courses.

  - The depth of student learning in this course was about the same as in most traditionally taught courses.

  - The depth of student learning in this course was less than in most traditionally taught courses.

- Please select the statement that best fits your sense of the breadth of student learning in this course:

  - The breadth of student learning in this course was greater than in most traditionally taught courses.

  - The breadth of student learning in this course was about the same as in most traditionally taught courses.

  - The breadth of student learning in this course was less than in most traditionally taught courses.

- Please indicate the extent to which you agree with each statement:

| Statement | Strongly disagree | Disagree | Neither agree nor disagree | Agree | Strongly agree |
|---|---|---|---|---|---|
| I was able to form personal relationships with students in this course similar to the kind of relationships that I have with students in traditionally taught courses. | | | | | |
| I was able to get to know students as individuals in this course. | | | | | |
| Students felt comfortable interacting with each other in an online environment. | | | | | |
| Students were able to disagree with each other in the online environment while still maintaining a sense of trust. | | | | | |
| Online discussions helped students to develop a sense of collaboration. | | | | | |
| There was a strong sense of community among the students in the course. | | | | | |
| Students demonstrated a clear understanding of the course structure and expectations. | | | | | |
| I felt comfortable guiding the class towards understanding of course topics and helping them to clarify their thinking in the online environment. | | | | | |
| Students were engaged and participated in productive dialogue in the online environment. | | | | | |
| Students were motivated to explore questions raised by the course. | | | | | |
| Students were comfortable using the online tools/technologies that were part of this course. | | | | | |

Implementation

- What instructional approaches specific to the online environment did you find worked especially well in this course? ___________

- What instructional approaches specific to the online environment did you find disappointing in this course? _______________

- What technology tools did you find worked especially well in this course? ____________

- What technology tools did you find did not work well in this course? _________________

Overall evaluation of course

- Please select the statement that best fits your situation:

  - Overall, my course went better than I expected.

  - Overall, my course did not go as well as I expected.

  - Overall, my course went about as well as I expected.

  - Overall, some aspects of my course went better and some things did not go as well as I expected.

- Please explain your answer to the previous question_______________________

- What did you find most satisfying about teaching in an online/hybrid format? ________________

- What did you find least satisfying about teaching in an online/hybrid format? _________________

- What is your overall assessment of whether the online/hybrid format is appropriate for teaching advanced humanities content?

  - Very appropriate

  - Somewhat appropriate

  - Not appropriate

  - Too early to tell

- Please explain your answer to the previous question_______________________

Next iteration

- What elements or approaches will you change for the second iteration of your course? _______________

- What were the big lessons or takeaways from the first iteration of your course? __________________

- Will you encourage your colleagues to teach online as a result of this experience? [Yes/no]

- Would you like to teach another online or hybrid course as a result of this experience? [Yes/no]

- Would you encourage students to enroll in an online or hybrid course as a result of this experience? [Yes/no]

Appendix II: Student Survey

Student Survey Instrument

1. Have you taken one or more online or hybrid courses before this semester? [Yes/no]

2. Rank the three most important reasons you chose to enroll in this course:

   - It fit my schedule.

   - I like to interact with fellow students online.

   - The course is required for my major.

   - I thought it would be easier than a traditional in-person course.

   - I thought I would learn more than in a traditional in-person course.

   - I was curious about online or hybrid courses.

   - The quality/reputation of the instructor attracted me to the course.

   - Other (please explain):_________

To what extent do you agree or disagree with the following statements about the course:

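| Statement | Strongly disagree | Disagree | Neither agree nor disagree | Agree | Strongly agree |
|---|---|---|---|---|---|
| | | | | | |
| | | | | | |
| | | | | | |
| | | | | | |
| | | | | | |
| | | | | | |
| | | | | | |
| | | | | | |
| | | | | | |
| | | | | | |
| | | | | | |
| | | | | | |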

- How would you evaluate your experience in this course?

  - Excellent

  - Good

  - Fair

  - Poor

- How would you compare this course to a traditional in-person course?

  - Much worse

  - Somewhat worse

  - About the same

  - Somewhat better

  - Much better

Please explain why you answered the way you did:________________________________

- How did this course compare to other upper level humanities courses in terms of difficulty?

  - Much more difficult

  - Somewhat more difficult

  - About the same

  - Somewhat easier

  - Much easier

- Would you take another online or hybrid course?

  - Definitely yes

  - Probably yes

  - Probably no

  - Definitely no

Why or why not?__________________________

- What is your class level?

  - First-year

  - Sophomore

  - Junior

  - Senior

  - Unclassified

Appendix III: Rubric for Peer Assessment

Version 1 (for courses taught in English)

| High Level Goal | Beginning: did not meet the goal | Developing: is approaching the goal | Competent: met the goal | Accomplished: exceeded the goal |
|---|---|---|---|---|
| 1. Interpret meaning as it is expressed in artistic, intellectual, or cultural works | The student a. does not appropriately use discipline-based terminology; b. does not summarize or describe major points or features of relevant works; c. does not articulate similarities or differences in a range of works. | The student a. attempts to use discipline-based terminology with uneven success and demonstrates a basic understanding of that terminology; b. summarizes or describes most of the major points or features of relevant works; c. articulates some similarities and differences among assigned works. | The student a. uses discipline-based terminology appropriately and demonstrates a conceptual understanding of that terminology; b. summarizes or describes the major points or features of relevant works, with some reference to a contextualizing disciplinary framework; c. articulates important relationships among assigned works. | The student a. incorporates and demonstrates command of disciplinary concepts and terminology in sophisticated and complex ways; b. identifies or describes the major points or features of relevant works in detail and depth, and articulates their significance within a contextualizing disciplinary framework; c. articulates original and insightful relationships within and beyond the assigned works. |
| 2. Synthesize knowledge and perspectives gained from interpretive analysis (such as the interpretations referred to in goal 1) | The student a. makes judgments without using clearly defined criteria; b. takes a position (perspective, thesis/hypothesis) that is simplistic and obvious; c. does not attempt to understand or engage different positions or worldviews. | The student a. makes judgments using rudimentary criteria that are appropriate to the discipline; b. takes a specific position (perspective, thesis/hypothesis) that acknowledges different sides of an issue; c. attempts to understand and engage different positions and worldviews. | The student a. makes judgments using clear criteria based on appropriate disciplinary principles; b. takes a specific position (perspective, thesis/hypothesis) that takes into account the complexities of an issue and acknowledges others' points of view; c. understands and engages with different positions and worldviews. | The student a. makes judgments using elegantly articulated criteria based on a sophisticated and critical engagement with disciplinary principles; b. takes a specific position (perspective, thesis/hypothesis) that is imaginative, taking into account the complexities of an issue and engaging others' points of view; c. engages in sophisticated dialogue with different positions and worldviews. |

Version 2 (for most foreign language courses)

| High Level Goal | Beginning: did not meet the goal | Developing: is approaching the goal | Competent: met the goal | Accomplished: exceeded the goal |
|---|---|---|---|---|
| 1. Comprehension of meaning as expressed in artistic, intellectual, or cultural works | Demonstrates little or no comprehension of the main ideas and supporting details from a variety of complex texts. | Partially demonstrates comprehension of the main ideas and supporting details from a variety of complex texts. | Demonstrates for the most part comprehension of the main ideas and supporting details from a variety of complex texts. | Consistently demonstrates comprehension of main ideas and supporting details from a variety of complex texts. |
| 2. Presentation in the language of instruction of ideas as interpreted through artistic, intellectual, or cultural works | Unable to produce narratives and descriptions in different time frames on familiar and some unfamiliar topics. No evidence of the ability to provide a well-supported argument. Produces only sentences, series of sentences, and some connected sentences. | Only partially able to produce narratives and descriptions in different time frames on familiar and some unfamiliar topics. Little evidence of the ability to provide a well-supported argument. May occasionally produce coherent and cohesive paragraphs, but usually reverts to sentences, series of sentences, and some connected sentences. | Produces narratives and descriptions on the course topic in all major time frames most of the time. Can formulate an argument, but may lack detailed evidentiary support. Writes coherent and cohesive paragraphs most of the time. May occasionally revert to sentences and strings of sentences. | Consistently produces narratives and descriptions on the course topic in all major time frames. Shows emerging evidence of the ability to provide a well-supported argument, including detailed evidence in support of a point of view. Writes texts with coherent and cohesive paragraphs. |

- All quotes are extracted from instructor and student surveys conducted at the end of the semester.

- A couple of students commented that they did not think the online/hybrid format was appropriate for foreign languages, but there were similarly negative comments in English-language courses. Given the small sample size, we do not have enough data to draw strong conclusions on this topic.

- The standard deviation of scores by institution is 0.44, meaning that over two thirds of average scores by institution were within 0.44 points above or below the mean of 2.61.

- Conference call held June 23.

- https://coi.athabascau.ca/coi-model/coi-survey/

- Defined as the extent to which they were able to construct meaning through sustained communication.

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License. To view a copy of the license, please see http://creativecommons.org/licenses/by-nc/4.0/.