Can Online Learning Improve College Math Readiness?

Randomized Trials Using Pearson’s MyFoundationsLab in Summer Bridge Programs

Far too many students in the United States start their postsecondary education without being able to demonstrate the skills and knowledge deemed necessary to succeed in college-level math. Colleges and universities have traditionally dealt with this problem by placing students in full-semester developmental courses for which they must pay full tuition but do not receive college credit. It has become clear, however, that this approach has serious drawbacks, as students who start out in remediation are far less likely to attain a degree. Developmental courses are increasingly seen as a barrier rather than a bridge to college success. A great deal of experimentation is underway to find solutions that are more effective and less costly for students.

Summer bridge programs are a popular approach to helping students close gaps before they start their first year of college. These are typically intensive, four- to five-week interventions that aim to address multiple areas of deficiency, including math, reading and writing, and study skills. Research suggests that summer bridge programs can help students start college on stronger footing, at least in the short term, but that benefits fade by the end of two years without additional support. Summer programs are not a practical solution for everyone: they are costly for the institution to provide, and many students are not able or willing to spend a large part of the summer between high school and college in intensive, campus-based programs. In fact, students who most need remediation may also be those who most need to work during the summer to pay for college.

Ithaka S+R worked with five campuses in the University System of Maryland to explore whether an adaptive learning product provided by Pearson could be used to offer more accessible, lower-cost summer programs. The Pearson product, MyFoundationsLab, enhanced with Knewton’s adaptive learning engine, aims to personalize study paths for students. Adaptive technologies enable students to identify specific skill gaps and work independently online to address those gaps with interactive instructional materials and assessments. We tested the hypothesis that technology could replace some or all of the traditional instructor-led class time required to help students improve their college readiness while substantially reducing the costs of the intervention.

The study took place in two iterations. First, we conducted pilots using MyFoundationsLab in both blended and online-only formats to explore whether this technology could help improve outcomes and/or lower costs. Second, we carried out a field study in which we tested the effects of an online-only program involving access to MyFoundationsLab along with prescribed messaging from facilitators encouraging students to participate and engage with the system. Given findings from earlier studies of summer bridge programs, we did not expect to see dramatic gains in academic performance; the question was whether this intervention could “move the needle” in helping more students enroll and succeed in college-level math.

For the field experiment, incoming first-year students at three institutions in Maryland were randomly assigned to treatment groups and control groups. Treatment group students were invited to participate in the program and provided with free access to MyFoundationsLab, while control group students either received no communications or were provided access to a website with static materials. Both groups of students were invited to retake their institution’s placement test and provided with assistance in switching into different math courses if their scores improved enough. In terms of outcomes, we looked at whether students opted to retake placement exams, whether they improved their scores, and how they performed in math courses during the first year of college.

We found that students responded positively to the offer of the program, with between 40% and 70% of students opting to participate across the three sites. Most of these students logged into the online program at least once. At two of the three institutions, students who had access to MyFoundationsLab were significantly more likely to retake the placement test and to improve their scores. We did not, however, see improvements in subsequent academic performance for students who received the intervention as compared to those who did not. Grades in math-related courses and the share of students who took and passed math courses among treatment group students were not statistically different from those of students in the control groups.

It may be that students engaged with the online system enough to achieve a narrowly defined goal—improved test scores—but not sufficiently to generate longer term and more meaningful benefits. We also cannot be sure how much of the improvement in scores was due to actual learning versus just retaking the test. Some data suggest that retaking the test had a positive effect, but that does not mean that the treatment had no impact on knowledge of math.

The online-only program was inexpensive to offer, and most of the cost in normal circumstances would be for software licenses (MyFoundationsLab costs $36.30 per student for ten weeks of access). This is dramatically less than the average of over $1,300 per student for a campus-based program cited in one study,[1] but the return in terms of student outcomes also appears to be lower. It seems likely that an optimal scenario involves a mix of face-to-face instruction and online work. Indeed, the pilot tests suggest that online technology can be used to reduce instructor cost per student for blended summer programs, but more research is needed to determine conclusively the effects on student learning when some portion of instructor-led class time is replaced with independent online work.

Our conclusion is that the MyFoundationsLab adaptive learning software can be used to offer a low-cost intervention that leads to improved placement test scores, but not necessarily to improved performance in math courses—arguably the outcome that really matters. It is possible that different software or more effective messaging could spur greater student engagement with the online system in this type of program. Given what we know now, however, these findings underscore the need for caution in relying on online-only solutions to close the college readiness gap for struggling students.

I. Introduction

Every year postsecondary institutions in the United States enroll more than 1.5 million students who are identified as not prepared to succeed in college-level mathematics.[2] This problem is particularly acute in community colleges and open enrollment four-year institutions, but even selective research universities take in many students whose math skills are not on track for their desired courses of study.[3] The standard approach for serving students who are deemed “not ready” for college-level math has been to place them in remedial or developmental courses, typically a sequence of full-semester courses starting as low as basic math (whole numbers, fractions, decimals, and so on). These courses do not count towards college degrees but still incur tuition and fees.

It is evident that this approach has substantial drawbacks. At the national level, students who start out in remedial courses have poor graduation prospects: only about a third of these students in four-year colleges finish in six years, and fewer than ten percent of community college students who start in remedial courses attain a degree in three years.[4] Moreover, the costs are substantial: a study conducted in 2009 estimated national direct costs of remediation at $3.6 billion.[5]

Educators, administrators, researchers, and policy makers are pursuing a variety of approaches to address this problem. Some question whether students, at least those with test scores close to cutoff points, might pass college-level math courses if given the chance. A study conducted by the Community College Research Center at Columbia University found that a significant portion of students who placed into developmental courses could have succeeded in college-level ones, and urged institutions to consider a broader set of indicators when making placement decisions.[6] Some institutions have compressed developmental course sequences or eliminated them altogether, replacing them with co-curricular supports, such as tutoring services.[7] State legislators are also getting involved: North Carolina four-year institutions are banned from providing developmental courses, and in Florida all high school graduates are entitled to take college-level courses if they so choose.

Summer bridge programs are one long-standing approach to improving college readiness.[8] These are typically intensive, campus-based programs offered during the summer between high school and college. They can aim to improve multiple dimensions of college readiness, including study habits and life skills in addition to math and literacy skills.[9] Some evaluations of summer bridge programs have found benefits for students from these intensive programs. For example, a study at the University of Arizona found positive effects on students’ academic skills, self-efficacy, and first semester grades.[10] The most rigorous study of summer bridge programs to date compared outcomes over a two-year period for students who participated in these programs with those of students who did not. This study involved six community colleges and two open enrollment institutions in Texas and used random assignment. The researchers found that the programs positively impacted students’ pass rates in college-level math and writing, as well as completion of math and writing courses, for the first year and a half. They did not, however, find any effects on the number of credits attempted or earned, or persistence rates over a two-year period. The authors surmised that it is not realistic to expect sustained long-term impacts from a short-term, intensive intervention.[11]

Summer bridge programs have other drawbacks. Not all students are able and/or willing to commit the necessary time during the summer after senior year—typically three to six hours per day for four to five weeks.[12] Moreover, these programs are expensive to provide, particularly if they are residential. The Texas study found that average costs per student to the institution ranged from $835 to $2,349, with an average of $1,319.[13] (This includes a stipend of up to $400 provided to students.) Given the high costs of summer bridge programs, as well as remedial courses, institutions continue to look for more cost-effective ways to prepare students for college-level math.
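To put these figures side by side with the MyFoundationsLab license fee quoted earlier in this report ($36.30 per student), the short Python sketch below computes how many times larger each campus-based cost is. It is a rough illustration only: the license fee excludes facilitator time and other overhead, so the two numbers are not a like-for-like comparison, and the function and variable names are ours.

```python
# Figures quoted in this report.
MFL_LICENSE_PER_STUDENT = 36.30  # ten weeks of MyFoundationsLab access
CAMPUS_PROGRAM_COSTS = {"low": 835.0, "average": 1319.0, "high": 2349.0}  # Texas study


def cost_ratio(campus_cost: float, license_cost: float = MFL_LICENSE_PER_STUDENT) -> float:
    """Return how many times larger the campus-based cost is than the license fee."""
    return campus_cost / license_cost


if __name__ == "__main__":
    for label, cost in CAMPUS_PROGRAM_COSTS.items():
        # Ratios are rounded for display; they ignore staff time for the online program.
        print(f"{label:>7}: ${cost:,.0f} per student, about {cost_ratio(cost):.0f}x the license fee")
```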

Potential of Emerging Technologies

The potential for online technologies to improve learning outcomes and lower costs in higher education is a topic of great interest. There are many instances of improved outcomes associated with implementation of learning technologies, but it has proven difficult to replicate these benefits at a large scale. From a research perspective, it has also been hard to tease out the effects of technology from other implementation factors, such as quality of instruction and student characteristics.[14] A 2011 meta-analysis of research on online learning found no significant difference in outcomes between online and face-to-face instruction, and more recent experiments have also failed to detect significant improvements (or decrements) associated with adoption of technology in hybrid formats (i.e., courses that combine face-to-face and online instruction).[15]

Still, optimism about the potential of online learning technologies persists, fueled in part by the enormous effort and investment focused on developing more interactive and engaging educational technologies.[16] In the first six months of 2015, investors poured over $2.5 billion into “instructional products directly involved in the learning process.”[17] Encouraging findings in large scale studies in the K-12 sector—where it is more feasible to randomly assign schools to different conditions—also provide reason to be hopeful that gains could be realized more systematically in postsecondary education as technologies and implementation methods advance.[18]

Much of this enthusiasm has focused on the development of adaptive learning technology.[19] These systems aim to capture information about individual students as they interact with the software and use this information to create customized learning paths targeted to their needs. This can mean identifying specific areas of weakness and directing students to work on those skills, changing the context in which concepts are embedded to reflect the interests of the learner, or modifying the pace of instruction to suit individual needs. The hope is that adaptive technology will enable students to increase their knowledge and skills more effectively and efficiently than traditional instruction (at least for some types of content), and that it will prove more engaging than earlier technologies. While much unsubstantiated hype is bandied about by developers, there is still good reason to expect that the tools available to faculty and institutions will continue to improve. Moreover, students appreciate the flexibility and convenience online learning affords, and we expect use of these technologies will only grow.

In terms of cost impacts, studies have found that online platforms can be used to substantially reduce the amount of class time in hybrid courses without harming student outcomes, but there is little conclusive evidence that these time savings translate into cost savings for institutions.[20] The many fixed costs involved with traditional instruction, such as classroom space and tenured faculty salaries, make such calculations extremely difficult. Moreover, given the many demands on faculty time, time savings from one activity can very easily be absorbed elsewhere without yielding reductions in staff costs.

Summer programs offer an appealing context for examining both learning impacts and the potential for cost reductions associated with emerging technologies. Since these programs are outside the core curriculum, their coordinators tend to have more flexibility in terms of what content is covered and how instruction is delivered. Since they typically rely on short-term contracts with instructors, a reduction in staff time demands can translate into direct cost savings.

Thus we set out to examine alternative and less costly approaches to offer summer programs using emerging technologies. Given the recent growth in adaptive learning, we wished to test whether one of these platforms could be used to offer summer programs that are more accessible, more effective, and/or less expensive.

Study of Online Learning in Summer Bridge Programs

In 2012, Ithaka S+R partnered with the University System of Maryland (USM) to test the broad hypothesis that online learning technologies can be used to improve learning outcomes and/or reduce costs for students enrolled in traditional universities. This initiative was funded by a grant from the Bill and Melinda Gates Foundation. During 2013-2014, Ithaka S+R and seven USM campuses conducted a series of experiments using Massive Open Online Courses (MOOCs) from Coursera and courses from the Open Learning Initiative at Carnegie Mellon in hybrid campus-based courses. The findings from those tests were reported in mid-2014.[21]

As part of the same initiative, Ithaka S+R worked with five institutions in Maryland to conduct a series of randomized controlled trials using MyFoundationsLab (MFL), an online learning product provided by Pearson, in summer bridge programs. The purpose of these tests was to explore the potential for emerging technology to help strengthen incoming first-year students’ mathematics preparation in cost-effective ways. Specifically, we sought answers to the following questions: what is the impact on student outcomes associated with use of an adaptive learning product as part of various types of summer bridge programs? Can MyFoundationsLab be used to make these programs more accessible and/or affordable? And finally, would we be able to detect any short-term or sustained benefits from interventions that are delivered entirely online?

Five institutions were involved over the three-year study: the University of Maryland, Baltimore County (UMBC), Towson University, Bowie State University, Coppin State University, and the University of Baltimore. Only one institution participated in both the pilot and the field test. In two iterations of tests over two summers, we randomly assigned students to treatment and control sections. Institutions participated voluntarily, largely because the research dovetailed with their needs and desire to try out new approaches to improve math readiness of incoming students.

In a set of pilot tests with both online and campus-based programs, we saw hints of potential benefits and decrements associated with use of the technology. On average, students who participated in summer programs made significant gains in math test scores, but the findings were inconclusive regarding the relative gains in traditionally taught versus blended instruction. At one of the sites, use of the technology in place of a portion of instructor-led class time was associated with lower learning gains, but made it possible to enroll more students with the same number of instructors.

In the field test, we focused on investigating whether the provision of an online-only summer program could produce gains in student placement test scores and in performance in first-year college math. This intervention consisted of an invitation to participate in the program, free access to MyFoundationsLab, and a series of email messages from program facilitators encouraging students to engage with the program.

Given the findings of earlier research on campus-based summer bridge programs, we did not expect to find dramatic gains associated with an entirely online intervention focused only on math skills. We did, however, see value in testing whether a relatively low-cost program, made possible by emerging technology, could “move the needle” in terms of students’ math preparation and in obtaining a clearer understanding of the value of the online tools. Furthermore, previous studies had focused on community colleges and open enrollment four-year institutions, and we thought it would be valuable to see how students attending more selective institutions might benefit from technology-enhanced summer programs.

Our overall finding was that students who received the intervention were more likely to retake the placement test and improve their scores. We did not, however, find evidence that these improved scores translated into obtaining more math credits or improved grades in math-related courses during the first year of college. This finding suggests that an online-only intervention using MyFoundationsLab can help raise student placement test scores, but will likely have a limited longer-term impact on academic performance.

II. Research Design

The study used experimental designs in which students were randomly assigned to groups with different levels of access and exposure to MyFoundationsLab. These designs enabled us to isolate the effects of the technology from selection effects, such as students’ level of motivation to learn math or work independently online. Each participating institution had specific requirements for the format of their program and research design, resulting in some important differences across sites.
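As a minimal sketch of what random assignment involves, the Python snippet below shuffles a list of eligible students and splits it into two groups. The report does not describe the actual randomization procedure, so the even split, the fixed seed, and the student IDs are all illustrative assumptions.

```python
import random


def assign_groups(student_ids, seed=2014):
    """Shuffle eligible students and split them into two near-equal groups.

    The seed and the 50/50 split are assumptions made for illustration.
    """
    rng = random.Random(seed)  # fixed seed so the assignment can be reproduced
    shuffled = list(student_ids)
    rng.shuffle(shuffled)
    midpoint = len(shuffled) // 2
    return {"treatment": shuffled[:midpoint], "control": shuffled[midpoint:]}


if __name__ == "__main__":
    eligible = [f"S{i:03d}" for i in range(1, 11)]  # hypothetical student IDs
    groups = assign_groups(eligible)
    print("treatment:", groups["treatment"])
    print("control:  ", groups["control"])
```

Because group membership depends only on the shuffle, characteristics such as motivation should be balanced across groups on average, which is what allows differences in outcomes to be attributed to the intervention.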

In the summer of 2013, we conducted pilot tests using MFL in three summer bridge programs focused primarily on math skills. Two of the pilot sites, University of Baltimore and Coppin State, had existing residential summer bridge programs and were primarily interested in finding ways to improve learning outcomes and reduce the costs of these programs. At these two campuses we examined the outcomes of students who were randomly assigned to sections with varying amounts of time allotted to facilitated computer lab work versus instructor-led class time. We also collected information on the costs of providing these programs. These pilots are described in more detail in Appendix A.

The third institution, UMBC, piloted a new online-only program intended to help students place into pre-calculus. This institution is a selective research university known for its science, technology, engineering and math (STEM) programs. Historically, students who are STEM majors and who place into college algebra are at risk of not completing their intended degrees in STEM fields. Administrators and faculty hoped that providing access to an online program for improving math skills would help more students place into pre-calculus. The selected product, MyFoundationsLab, was accompanied by a series of communications from facilitators who tracked students’ progress and sent email “nudges” two to three times per week encouraging them to engage with the online product.

For the pilots, outcomes of interest included students’ decisions whether to retake a placement test when given the option and the differences in scores for those who did. Coppin State also tracked student performance on assessments embedded in MFL. We found that the differences in implementation and outcomes across the three sites outweighed the effect of using MFL compared to traditional instruction. Looking at placement test scores for students who opted to retake the test at the end of the summer, students in hybrid sections did better on one campus and worse on the other.

For the field test, we recruited two new study sites that were willing to implement the program in a format similar to that piloted at UMBC. Some adjustments were made to the format, such as timing of the program and recommended email nudges, which were refined based on research on the kinds of messages that have been shown to influence student behavior.[22] For example, facilitators sent messages recognizing positive effort and informing students when they put in less effort than their peers; examples of these messages are available in Appendix B. Programs lasted from four to six weeks.
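The logic behind the two kinds of messages described above can be sketched in a few lines of Python. This is a hypothetical illustration, not the facilitators' actual procedure: we assume effort is measured as minutes logged in the system and that "peers" means the median across students, neither of which is specified in the report. The actual message texts are in Appendix B.

```python
from statistics import median


def choose_nudge(minutes_by_student, student_id):
    """Pick a message type by comparing one student's time on task with the peer median.

    Both the effort measure (minutes) and the median benchmark are assumptions.
    """
    peer_median = median(minutes_by_student.values())
    minutes = minutes_by_student[student_id]
    if minutes >= peer_median:
        return "recognize_effort"   # message acknowledging positive effort
    return "below_peers"            # message noting less effort than peers


if __name__ == "__main__":
    usage = {"S001": 120, "S002": 15, "S003": 60}  # hypothetical weekly minutes
    for sid in usage:
        print(sid, choose_nudge(usage, sid))
```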

Outcomes of interest again included whether or not students chose to retake the placement test and their scores on the first and, if applicable, second test administrations. In addition, we observed whether students who were invited to participate in the program enrolled in math-related courses during their first year in college, their course grades, and whether they passed those courses. We also examined overall GPA to see whether there were spillover effects on other types of courses.
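For a binary outcome such as whether a student retook the placement test, one common way to compare treatment and control groups is a two-proportion z-test. The sketch below is offered only as an illustration of that general technique; this section does not state which statistical tests were used, and the counts in the usage example are invented, not study data.

```python
import math


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Return (z, two-sided p-value) for the difference between two proportions."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero; both groups have identical all-or-none outcomes")
    z = (p_a - p_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail of the standard normal
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts: 50 of 100 treatment students retake vs. 30 of 100 controls.
    z, p = two_proportion_z_test(50, 100, 30, 100)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

The normal approximation used here is reasonable for groups of this size but becomes unreliable when counts are very small.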

While the field tests were implemented with greater uniformity across the three sites, there were still some important distinctions reflecting local conditions and needs.

Towson University, the state’s largest comprehensive university, offers master’s and applied doctoral degree programs and has the Carnegie classification of Master’s (Comprehensive) University I. Students who had placed into developmental math and into some 100 level courses were considered eligible for participation. Many of these students needed to take pre-calculus and/or statistics for their degrees, so they had an incentive to improve placement scores to place directly into those subjects. Eligible students were randomly assigned to treatment and control groups without consent, though students were notified of the study and given the opportunity to opt out (approximately one percent of eligible students chose to do so).[23] Treatment group students received a letter describing the program and encouraging them to participate (a sample letter is provided in Appendix C). The program consisted of free access to MyFoundationsLab and nudges from a program facilitator encouraging students to engage with the product. Both treatment and control group students were notified of the option to retake the placement test at the end of the summer, count only the higher score, and get help from an advisor in changing their course schedules if they placed into a higher level of math. Other than this notification, control group students did not receive additional communications or supports. Both treatment and control group students were asked to respond to an online survey after the program ended.

Table 1: Overview of Towson Study Design

| Institution | Eligibility | Randomization procedure | Control condition | Student data collected | Study participants |
|---|---|---|---|---|---|
| Towson | Students who placed into developmental math and into some 100 level courses | Consent not required for random assignment; students could opt out | Invited to retake placement test, use highest score, get advisor help changing math course enrollment | MAA Maplesoft scores; transcript data; usage logs; post-program survey | 697 |

Bowie State is an HBCU with the Carnegie classification Master’s College and University I. Administrators chose to focus the intervention on students who placed into developmental math and below pre-calculus. Those who had already signed up to attend an intensive in-person summer bridge program were excluded based on the belief that the latter would be more beneficial. As at Towson, all eligible students were randomly assigned to treatment and control groups, and only treatment group students received communications from the program facilitator and free access to MyFoundationsLab. Both groups of students were invited to retake the placement test and get help switching into a higher level math course.

Table 2: Overview of Bowie State Study Design

| Institution | Eligibility | Randomization procedure | Control condition | Student data collected | Study participants |
|---|---|---|---|---|---|
| Bowie State | Students who placed into developmental math but below pre-calculus; excluded students enrolled in on-campus program | Consent not required for random assignment | Invited to retake placement test, use highest score, get advisor help changing math course | Accuplacer test scores; transcript data; usage logs | 155 |

As noted above, UMBC’s primary focus was on students who placed into college algebra. Some students who placed into remedial math and pre-calculus were also included. Unlike the other two institutions, UMBC’s institutional review board required that students give consent before random assignment. Eligible students were first invited to participate in the study, and then those who opted to participate were randomly assigned to treatment and control groups. This difference in procedures is important, as only 29% of invited students chose to participate in the study, reducing the study population from approximately 400 eligible students to 116. Students in the treatment group, as at other sites, had access to MFL and received frequent nudges from the facilitator. Students in the control group were given access to a Blackboard website with resources available through the campus Learning Resource Center and received fewer communications.
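The effect of the consent requirement on sample size can be checked with simple arithmetic. The snippet below uses the two figures given above (approximately 400 eligible students, 116 participants); the variable names are ours.

```python
eligible_students = 400    # approximate figure given in the report
consented_students = 116   # students who opted in and were then randomized

participation_rate = consented_students / eligible_students
print(f"Participation rate: {participation_rate:.0%}")
```

Because randomization happened only among students who consented, results at UMBC describe students willing to opt in, who may differ from the full eligible population.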

Table 3: Overview of UMBC Study Design

| Institution | Eligibility | Randomization procedure | Control condition | Student data collected | Study participants |
|---|---|---|---|---|---|
| UMBC | Students who placed into college algebra, as well as some students who placed into remedial math and pre-calculus | Consent required before random assignment | Invited to participate in program, access to Blackboard website with resources from Learning Center; invited to retake placement test, use highest score, get advisor help changing math course | Locally developed test; transcript data; usage logs | 116 |

Table 4 summarizes components of the intervention provided to the treatment and control groups at each institution and demonstrates the consistency in intervention design for all three institutions. It also shows that UMBC had smaller differences in format between the treatment and control groups than the other two sites.

Table 4: Intervention Components

| Intervention components | Towson | Bowie State | UMBC | |||

|---|---|---|---|---|---|---|

| Treatment | Control | Treatment | Control | Treatment | Control | |

| Invitation to participate in summer program | ✓ | ✓ | ✓ | ✓ | ||

| Option to attend in-person orientation | ✓ (pilot year) | ✓ (pilot year) | ||||

| Access to MFL | ✓ | ✓ | ✓ | |||

| Access to computer lab on campus | ✓ | ✓ | ||||

| Access to static online resources | ✓ | |||||

| Program facilitator | ✓ | ✓ | ✓ | ✓ | ||

| Option to retake placement test | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |

| Assistance switching to different math courses if placement score improves | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |

Pearson MyFoundationsLab

Each of the five campuses used Pearson’s MyFoundationsLab (MFL), a mastery-based system for assessing and remediating college readiness skills with personalized learning plans and interactive learning activities. This product was selected through discussions with the five participating institutions, several of which had prior experience with MyMathLab (MML), another Pearson product. Pearson representatives expressed a desire to participate in this research, provided free licenses for the study, and supported two iterations of implementation and data collection.

We were also interested in testing MFL because it is instrumented with the Knewton adaptive learning engine. Knewton describes itself as the “world’s leading adaptive learning technology provider” and has raised over $147 million from 19 investors since its launch in 2008.[24] Knewton’s technology is intended to diagnose students’ skill gaps at a granular level and prescribe specific activities to address individual gaps in conceptual understanding or skills. Investigating the impacts associated with adaptive technologies was a high priority given the lack of such evidence available when this study launched in 2013.[25]

MyFoundationsLab and MyMathLab have several differences. One is content coverage: MFL includes reading, writing, and study skills in addition to mathematics. Four of the campuses participating in the study used only the math components, while one of the pilot sites (Coppin State) also used reading and writing components. MFL covers whole numbers through trigonometry. MML, on the other hand, addresses only math and covers topics through multivariable calculus. Both products are designed to be used in a wide variety of settings, ranging from summer bridge camps and boot camps, to adult basic education, to placement prep courses, and other programs aimed at accelerating students through developmental curricula. MML is also widely used in college-level courses. According to Pearson, the strongest implementations of these two products are in blended or lab-based environments within educator-managed programs or courses.

MFL is designed to enable students to work independently and at their own pace. Pearson representatives describe the product as having two layers of adaptivity. The first layer begins with a diagnostic test called PathBuilder, which assesses students’ skills and generates a personalized “learning path” for each student. The individual “learning path” consists of modules that are identified as areas of weakness. At the beginning of each module students are presented with a “skills check” which identifies specific topics a student needs to work on within that module.
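The first layer of adaptivity described above can be pictured as two filtering steps: a diagnostic selects weak modules, and a skills check within each module selects weak topics. The Python sketch below illustrates that idea only. It is not Pearson's algorithm; the mastery threshold, the scoring scale, and all module and topic names are hypothetical.

```python
from dataclasses import dataclass, field

MASTERY_THRESHOLD = 0.8  # hypothetical cutoff; the report does not state one


@dataclass
class Module:
    name: str
    diagnostic_score: float                           # share correct on the diagnostic, 0 to 1
    skills_check: dict = field(default_factory=dict)  # topic name -> share correct


def build_learning_path(modules, threshold=MASTERY_THRESHOLD):
    """Keep modules scored below the threshold, preserving their original order."""
    return [m for m in modules if m.diagnostic_score < threshold]


def topics_to_study(module, threshold=MASTERY_THRESHOLD):
    """Within one module, list topics whose skills-check score is below the threshold."""
    return [topic for topic, score in module.skills_check.items() if score < threshold]


if __name__ == "__main__":
    # Hypothetical diagnostic results for one student.
    modules = [
        Module("Fractions", 0.9),
        Module("Linear Equations", 0.5, {"Slope": 0.4, "Intercepts": 0.9}),
        Module("Factoring", 0.6, {"Common Factors": 0.7, "Trinomials": 0.3}),
    ]
    for module in build_learning_path(modules):
        print(module.name, "->", topics_to_study(module))
```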

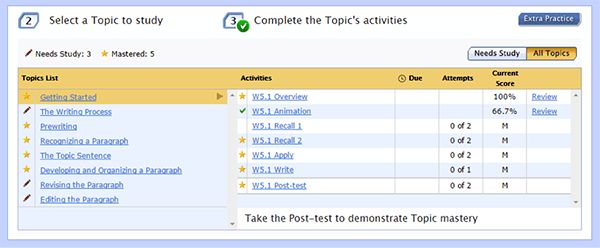

Figure 1: Student View of Module within MFL

Instructors can designate which modules and topics should be included in a program, and the system provides 20 modules and 243 topics in total. Topics consist of instructional resources (tutorials, videos, and worked examples), practice problems, quizzes, and post-tests. Two of the three field test sites selected 16 modules for their programs, and one selected 10 modules.

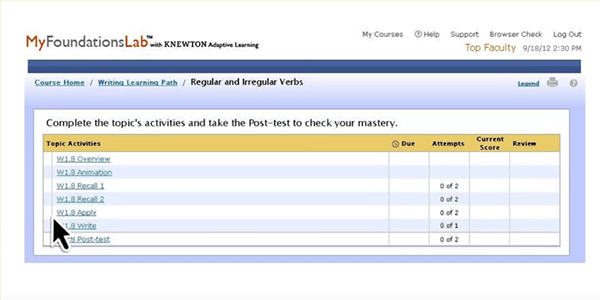

Figure 2: Student View of Topic within MFL

The program is self-paced, meaning that students can work through topics and assessments on their own schedules. Students can monitor their own progress through the modules. The system also includes an instructor dashboard that shows students’ scores on assessments in the system, their progress in the course by topic and unit, and engagement metrics such as last login time and date.

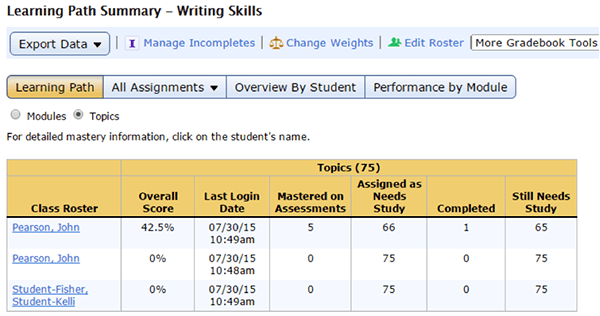

Figure 3: Instructor View of Student Progress within MFL

The second layer of adaptivity is provided by the Knewton adaptive learning engine, which provides recommendations for what each student should review or work on next. It functions within the set of modules selected for a course or program, and works at the topic level. In other words, students are prompted to work on an “optimized” sequence of topics. Instructors can choose whether or not to “turn on” the recommendation engine, and we were informed that a large majority of instructors do choose to turn it on.

Data Collected

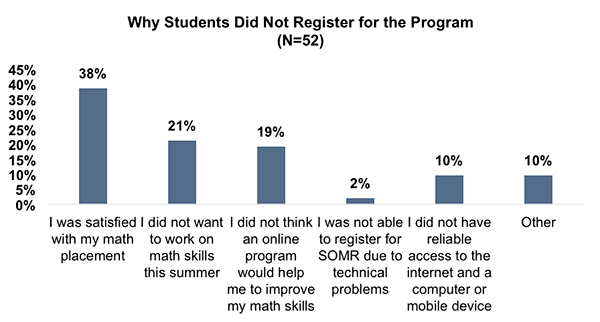

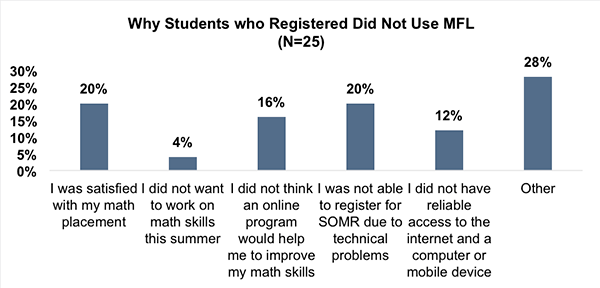

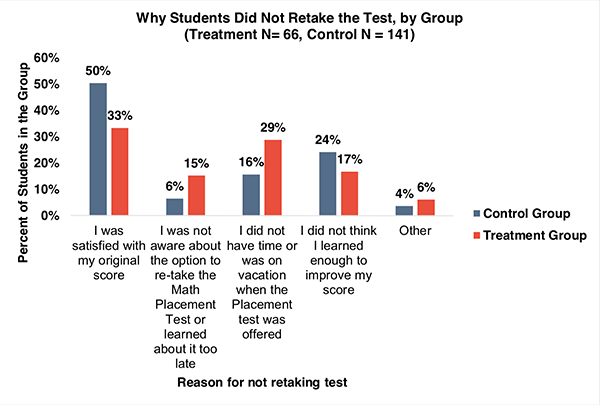

Data collected for the three field test sites included pre-test scores, post-test scores, usage logs from Pearson, transcript data for students’ first year of college, and data on student characteristics. Each of these institutions used their placement tests as the pre- and post-tests: MAA Maplesoft (Towson), Accuplacer (Bowie State), and a locally developed exam (UMBC). We used student transcripts to determine whether students enrolled in college-level math-related courses, their grades and pass rates in math-related courses, and their overall GPAs. At Towson we conducted a survey of all students in the control and treatment groups to collect additional data about perceptions of the program, reasons for participating or not participating in the program and retaking or not retaking the placement test, and satisfaction with various aspects of the program. Survey results are provided in Appendix E. Finally, we interviewed program facilitators to qualitatively assess the success of the programs.

III. Findings

We will first look at the findings for each of the three sites and then summarize the results. We start with Towson given that we had by far the largest study population at this site.

Towson

At Towson, we found considerable interest among students in participating in the program. The total study population was 697 students, with 352 randomly assigned to the treatment group and 345 to the control group. Of the students who were invited to participate in the intervention, 56% chose to do so, and of those, 83% logged into MFL at least once, indicating that 47% of invited students logged on at some point. Students with lower placement scores were more likely to participate in the program, although the majority of eligible students had relatively high placement scores. (Table 1 in Appendix E provides a breakdown of participation by pre-test score.)
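The participation funnel in this paragraph is a chained-percentage calculation. The following is a minimal sketch using only the rounded shares reported above; the function name is ours, introduced for illustration. Because the inputs are rounded, the product lands slightly under the reported 47%, which was presumably computed from unrounded counts.

```python
def share_logged_in(participation_rate: float, login_rate_among_participants: float) -> float:
    """Share of all invited students who logged in at least once.

    Multiplies the share who chose to participate by the share of those
    participants who logged in.
    """
    return participation_rate * login_rate_among_participants


# Rounded Towson figures from the text: 56% participated, 83% of those logged in.
towson_share = share_logged_in(0.56, 0.83)
print(f"Invited Towson students who logged in: {towson_share:.1%}")
```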

This level of engagement translated into significantly higher final placement test scores, with 23% of treatment group students opting to retake the placement test, compared to 10% for the control group. We also saw an average improvement of 1.3 out of 25 possible points on the exam among treatment group students, compared to 0.5 for control group students. (Students who did not retake the placement test or who scored lower on the retake are assigned a score change of zero.)

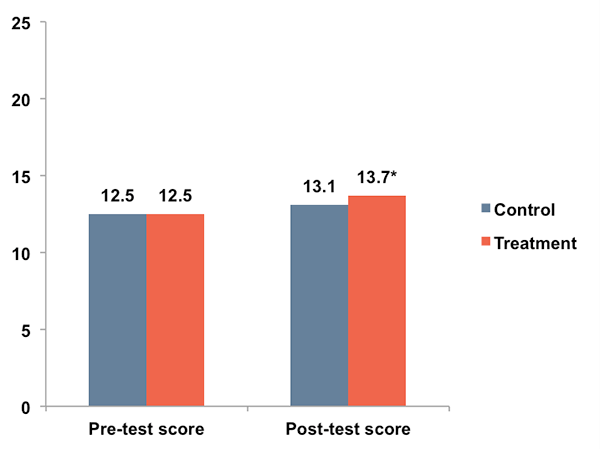

Figure 4: Towson Pre/Post-Test Scores for Control vs. Treatment Groups

*Indicates treatment/control difference is statistically significant at p<.10 level.

The difference in post-intervention placement test scores at Towson is statistically significant. However, the larger increase in scores among the treatment group may have been partly the result of the higher retake rate. If we look only at students who retook the test and include both score increases and decreases, we find an average score gain of 5 points in the treatment group and 4.5 points in the control group.
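The two ways of averaging score changes described for Towson can be sketched as follows. This is an illustration of the scoring conventions only: the function names are ours and the student records are hypothetical, not study data.

```python
from statistics import mean
from typing import Optional


def recorded_gain(pre: float, post: Optional[float]) -> float:
    """Score change under the whole-group convention.

    `post` is None when the student did not retake the test. Non-retakers
    and students who scored lower on the retake are recorded as zero.
    """
    if post is None:
        return 0.0
    return max(post - pre, 0.0)


# Hypothetical (pre, post) pairs; None means the student did not retake.
students = [(10, None), (12, 17), (15, 13), (8, None), (11, 15)]

# Whole-group mean: every student counts, with zeros for non-retakers
# and for retakers whose score fell.
whole_group_mean = mean(recorded_gain(pre, post) for pre, post in students)

# Retakers-only mean: drop non-retakers and keep increases and decreases.
retaker_changes = [post - pre for pre, post in students if post is not None]
retakers_only_mean = mean(retaker_changes)

print(f"Whole-group mean gain:   {whole_group_mean:.2f}")
print(f"Retakers-only mean gain: {retakers_only_mean:.2f}")
```

The whole-group mean rises with the retake rate even when retakers gain the same amount, which is why the retakers-only comparison is a useful check.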

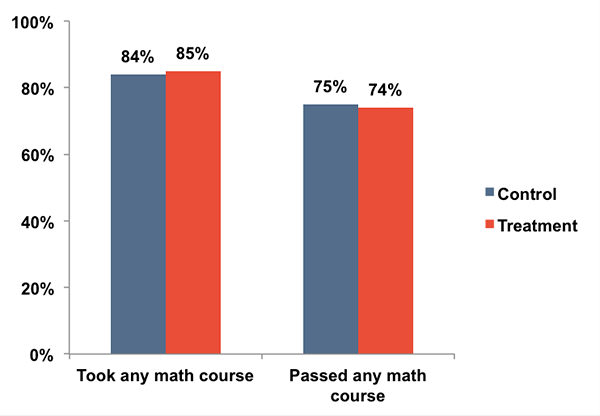

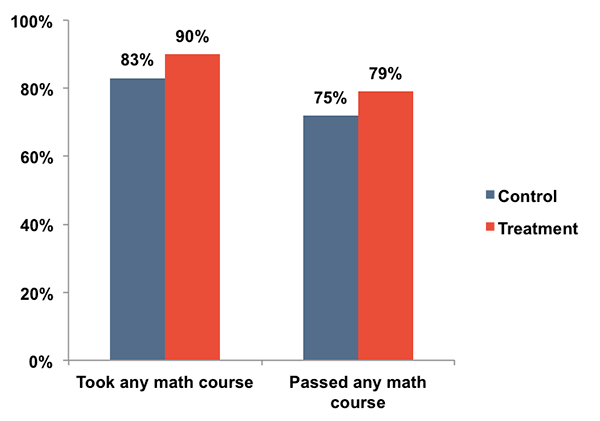

This is consistent with the lack of impacts on first-year course performance we find when comparing percentages of students that took any math course and passed any math course during the first year of college.

Figure 5: Towson Math Performance for Treatment vs. Control Groups

We find a similar lack of differences when we look at GPAs in all graded courses and in math courses during this period. In sum, we can be confident that being offered access to MFL increased the likelihood that students retook the placement test and increased their scores, but it did not necessarily increase their knowledge of math.

Bowie State

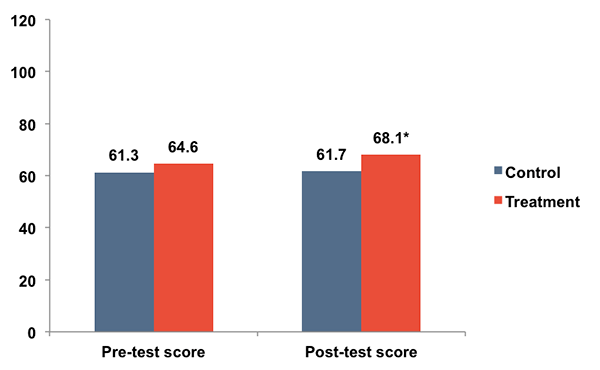

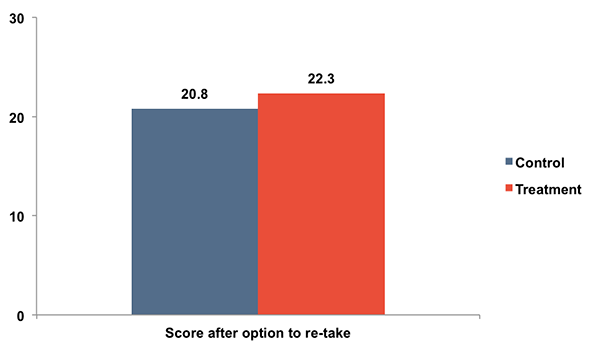

Here 155 students were involved in the study: 90 in the treatment group and 65 in the control group.[26] Of those who were invited to participate, 49% chose to do so, and 82% of these students logged on at least once. As with Towson, we saw that students who were invited to participate in the program were more likely to retake placement tests and to improve their scores. 30% of treatment group students retook the placement test, compared to 6% of control group students, resulting in significantly higher gains in placement test scores. Moreover, of students who did retake the placement test, those who had access to MFL did much better: these students raised their average scores by 8.2 points, while the very small number of students in the control group who retook the placement test actually got lower scores by 5.3 points, on average (see Appendix D Table 7).

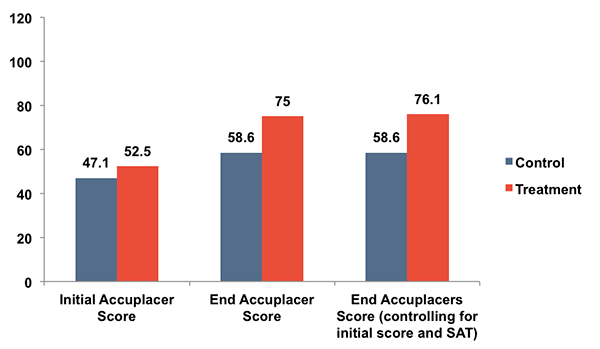

Figure 6: Pre/Post-Test Scores for Bowie State Treatment vs. Control Groups

*Indicates treatment/control difference is statistically significant at p<.10 level.

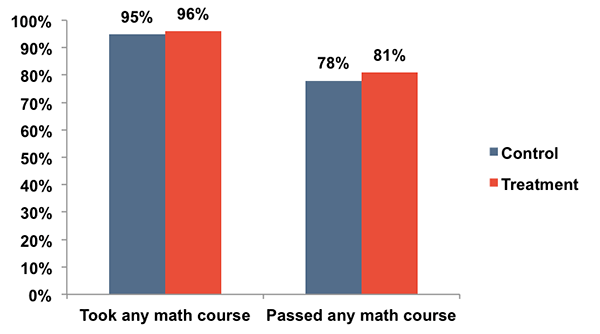

As with Towson, students who received the intervention did not have significantly better academic outcomes in math courses. Students in the treatment group had slightly higher average pass rates and math enrollment, but these differences were not statistically significant.

Figure 7: Bowie State Math Performance for Treatment vs. Control Groups

UMBC

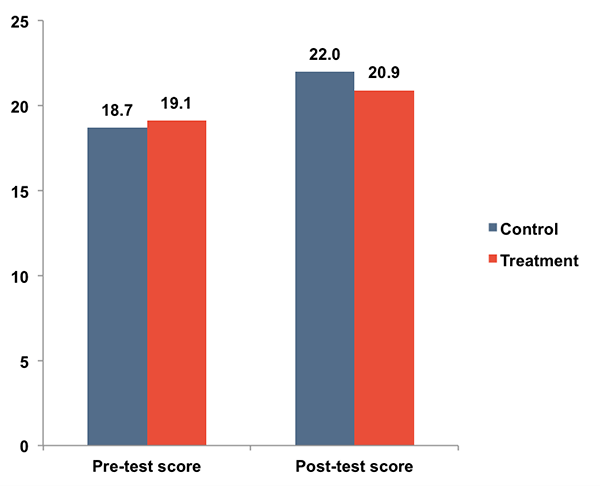

At UMBC students were invited to participate in the study before they were assigned to treatment or comparison groups. Out of 543 eligible students, 116 consented to participate in the study (21%). These 116 students were then randomly divided into treatment and control groups. All participants were enrolled in a zero-credit PRAC 101 course, which included two different Blackboard sites that were used for communication by the instructors. Out of 58 students in the treatment group, 44 logged into MFL at least once (76%). Gaining access to MFL did not, however, increase the likelihood that students retook the placement test. The share of treatment group students who opted to retake the placement test is significantly lower than that of the control group (36% compared to 62%). We saw a similar pattern in the pilot year, and though the sample size is small this raises the possibility that this was not just a random occurrence. Moreover, we found that the average score gain for students who had access to MFL was smaller than for those who did not, though this difference is not statistically significant.

Figure 8: UMBC Pre/Post-Test Scores for Treatment vs. Control Groups

*Indicates treatment/control difference is statistically significant at p<.10 level.

One possible explanation for the difference in findings between UMBC and the other two institutions is the randomization procedure. At Towson and Bowie State, students were randomly assigned to treatment or control conditions without first obtaining their consent (though students at Towson could opt out of the study). As a result, we were able to achieve a larger study population and include comparison groups that were more representative of non-participants in the study. At UMBC, on the other hand, students had to opt into the study before they could be assigned to treatment or control groups, and students were offered the opportunity to retake the placement test and keep the best score as an incentive for participation. It is therefore reasonable to hypothesize that students who opted into the study were more motivated than those who did not to improve their math skills or placement score, and thus are not representative of non-participants. There is evidence of this bias in data showing how many students actually logged into MFL—76% of the treatment cohort at UMBC compared with only 47% at Towson.

This hypothesis does not explain why control group students were much more likely to retake the placement test at UMBC and made slightly larger gains. It is possible that these students found alternate (and more effective) ways to prepare for the exam. It is also possible that students score higher on a retake even when they do not prepare for it, and thus the larger retake rate among the control group led to a larger score increase (remembering that the score gain for students who do not retake the placement test is recorded as zero, and thus these students may bring down the mean score gain for their group). If students who did not retake the placement test are removed from the analysis, we find that students in both treatment and control groups increased their scores by about five points (similar to what we found at Towson). This suggests that MFL neither hurt nor helped students in the treatment group with respect to their math skills.
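The dilution argument can be made concrete with a small calculation. This sketch assumes, purely for illustration, that retakers in both groups gained exactly five points and that no retaker scored lower. The retake rates are those reported above for UMBC; the resulting group means are illustrative and are not study results.

```python
def group_mean_gain(retake_rate: float, mean_gain_among_retakers: float) -> float:
    """Mean gain for a whole group when non-retakers are recorded as zero."""
    non_retaker_gain = 0.0
    return (retake_rate * mean_gain_among_retakers
            + (1 - retake_rate) * non_retaker_gain)


# Assumed gain of 5 points among retakers in both groups (illustrative).
treatment_mean = group_mean_gain(retake_rate=0.36, mean_gain_among_retakers=5.0)
control_mean = group_mean_gain(retake_rate=0.62, mean_gain_among_retakers=5.0)

print(f"Treatment group mean gain: {treatment_mean:.1f}")
print(f"Control group mean gain:   {control_mean:.1f}")
```

Under these assumptions the control group shows the larger average gain solely because more of its students retook the test.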

Figure 9: UMBC Math Performance for Treatment vs. Control Groups

At UMBC, a higher percentage of treatment group students took and passed math courses, but these differences were not statistically significant. We did not see a difference in grades for math-related courses or overall.

Summary of Findings

Overall, students responded positively to the offer of the program, with roughly half the students who had access to MFL across the three sites logging into the system at least once.[27] At two of the field test sites, we found that those who received the intervention were more likely to retake the placement test and to raise their placement test scores, thus achieving significantly improved test scores. We cannot be sure, however, how much of the improvement in scores is due to actual learning as compared to just retaking the test. In addition, we did not see evidence of performance gains in first year math-related courses. The notable exception is UMBC, whose treatment group students were significantly less likely to retake the placement test. We believe this may be at least in part due to the randomization procedure, as students in the control group had opted to participate in the study and thus may not have been representative of the population at large.

Is it possible that students who received the treatment enrolled in more advanced math courses, but that this gain did not appear in other outcome measures? This seems possible at Towson given that many students had originally placed into college level math. Our analysis of transcript data did not, however, find evidence that this was the case.

Usage Data Analysis

Usage data from Pearson suggests that many students engaged with the program and made progress within the system in terms of mastering modules or topics.

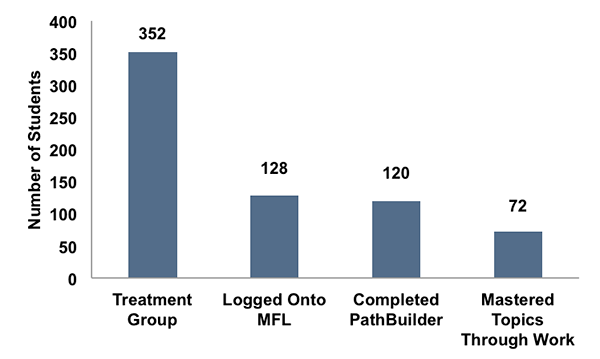

Figure 10 shows that out of 352 students at Towson who had access to MFL, 128 logged in at least once, and 94% of those completed PathBuilder, the initial diagnostic assessment. Of those who completed PathBuilder, 60% mastered at least one topic through working in the system.

Figure 10: Student Engagement in MFL at Towson

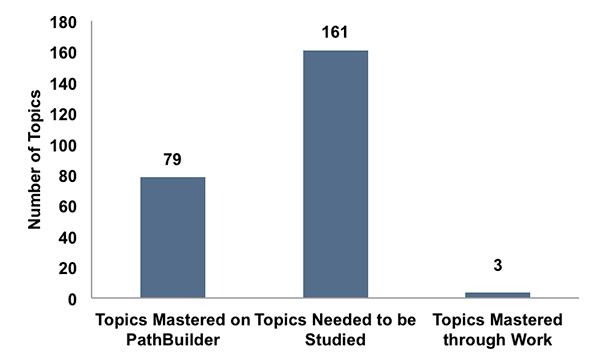

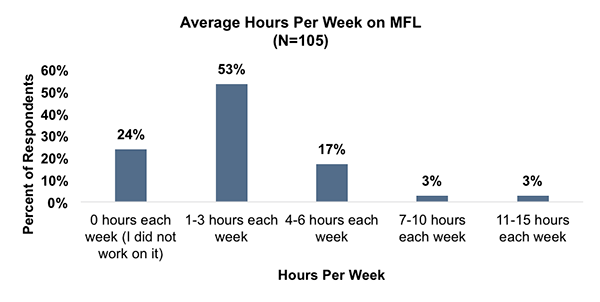

On the other hand, Figure 11 shows that students typically made little progress in terms of mastering topics within MFL. On average, in the initial assessment students demonstrated mastery on 79 topics and needed to work on 161. On average, students mastered only 3 topics through work. If we exclude students who did not master any topics at all, the average climbs to 7.7 topics mastered through work. The usage reports that we obtained do not shed light on why so few students recorded progress within the system. One instructor reported complaints from students about the number of assessments (i.e., PathBuilder, skills checks, and post-tests within each topic). It is possible that the large volume of topics and assessments was overwhelming for students working independently. It is also possible that students worked in the instructional materials and assignments for topics identified as “needing study,” but did not complete the post-tests and thus did not record progress.

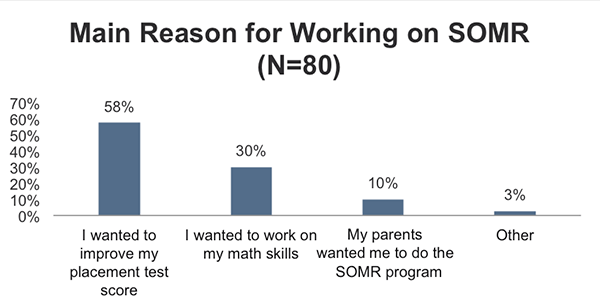

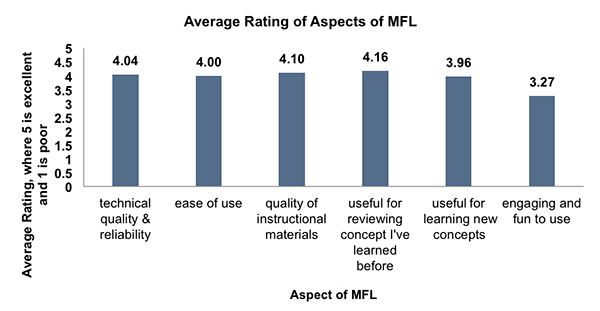

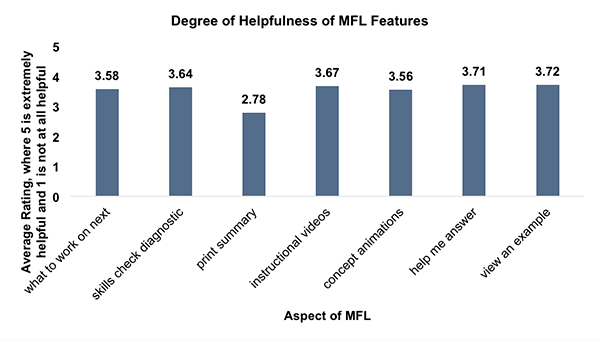

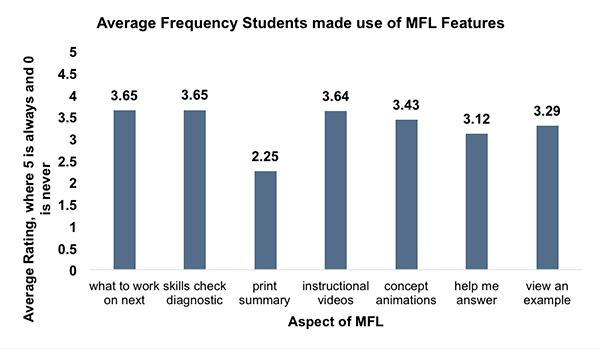

In a survey at Towson (reported in Appendix E), students gave fairly high ratings to MFL on most dimensions, with scores ranging from 4.0 to 4.2 out of 5 on criteria such as technical quality, ease of use, and quality of instructional materials. They gave a lower rating of 3.3 for “engaging and fun to use.” Ratings of various features of the product were fairly consistent at 3.6-3.7 on a six-point scale, including the skills check diagnostic. Thus, survey responses do not shed light on whether the number of assessments constituted a barrier to progress for many students. Moreover, the survey results should be viewed with caution since only 45% of treatment group students responded.

Figure 11: Student Mastery of Topics within MyFoundationsLab (Total Possible = 243)

Analysis of usage data from Pearson indicates that certain behaviors in MyFoundationsLab are associated with placement score gains. We found positive associations between student gains on placement tests and whether they logged into MFL, completed the PathBuilder diagnostic assessment, and completed modules. Analysis of MFL usage and performance in math courses did not show any clear pattern. In any case, we would not be able to infer causation, as usage and outcome variables may be influenced by unobserved characteristics such as students’ motivation and desire to do well in math.

Given the lack of consistent student progress within the system, the value of the Knewton adaptive learning engine is difficult to ascertain. Anecdotally, instructors were skeptical that it made a difference because they observed that students tended to skip around within the program rather than follow the path prescribed by the adaptive engine.

Costs

Costs associated with the online intervention were relatively low. Institutions needed to appoint a program coordinator to determine which students should be eligible and issue invitations to students at the appropriate time (late spring or early summer). A facilitator at each institution had to provide students with access to MFL, answer questions, and issue the prescribed set of “nudges” over a period of 4-6 weeks. The coordinator also bore responsibility for enabling students to retake the placement test and making sure that students were aware of this option. Finally, advisors needed to be on hand to help students who improved their placement scores to decide to enroll in higher level math courses or not, based on the degree of improvement, their majors, and/or possible impact on their fall schedules.

Pearson waived the licensing fee for the students in the study, but under normal circumstances, students (or institutions, if they so chose) would incur a license fee to use MFL. This fee is estimated at $33 for 10 weeks of access. It is worth considering how students might have responded if they had borne this cost: one can imagine that some students would have opted not to participate, but one can also imagine that students who had paid the license fee would be more inclined to spend time in the program to make it worth the monetary cost. With conservative assumptions that a facilitator costs $4,000 and 200 students are enrolled in the program, the cost per student would be just over $50, considerably lower than the average cost of $1,319 for campus-based programs.
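The per-student estimate can be reproduced with a short calculation. This sketch assumes the figure combines the facilitator cost spread evenly across enrolled students with the per-student license fee; the function name is ours, and the inputs are the figures quoted above.

```python
def cost_per_student(facilitator_cost: float, n_students: int, license_fee: float) -> float:
    """Facilitator cost shared across students, plus each student's license fee."""
    return facilitator_cost / n_students + license_fee


online_cost = cost_per_student(facilitator_cost=4000, n_students=200, license_fee=33)
campus_based_cost = 1319  # average cost per student cited for campus-based programs

print(f"Online program cost per student: ${online_cost:.2f}")
print(f"Campus-based programs cost about {campus_based_cost / online_cost:.0f} times as much")
```

The estimate is sensitive to enrollment: with fewer students the facilitator share grows, while the license fee stays fixed per student.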

Qualitative findings from the pilots suggest that technology can be used to bring down the costs of campus-based programs. Instructors at University of Baltimore and Coppin State found that use of MFL saved significant time in grading student work. The coordinator for the latter noted that they were able to enroll many more students without increasing the number of instructors due to use of the technology. These instructor time savings can thus translate into direct per-student cost savings for institutions.

IV. Conclusion

This study found that online summer bridge programs using an adaptive online math program could help students to raise their placement test scores at a relatively low cost to institutions and students. Students responded positively to the offer of the program, with roughly half of students who had access to MyFoundationsLab logging in at least once.

Our findings are consistent with those of other research on summer bridge programs, indicating that these interventions can produce narrowly defined benefits for students but that these advances do not translate into improved long-term academic performance. The degree of impact appears to be somewhat proportional to the intensity of the program. The greater score gains for students in the blended campus-based programs that we observed in the pilots suggest that students are more likely to make significant gains on math tests with at least some face-to-face instruction.

It is possible that we would have found greater impacts on key outcomes with a different software product or with more effective messaging. Conducting similar research using products with significantly different designs would be worthwhile. For example, a system with fewer assessments and less content might be more approachable for students working independently. It would also be worthwhile to dig further into the possibilities for using technology to reduce costs in blended summer programs. Finally, these findings indirectly raise further questions about the predictive value of placement exams, as improvements in math test scores did not lead to gains in math course success.

On balance, we are inclined to conclude that use of MyFoundationsLab in an optional, online program can modestly mitigate one symptom of the college readiness problem—underperformance on placement tests—but is unlikely to address the underlying shortage of skills and knowledge of mathematics.

Acknowledgments

We are truly grateful to the staff of the University System of Maryland and participating institutions for their collaboration in this study. From the University System of Maryland, we would particularly like to thank MJ Bishop, Director of the William E. Kirwan Center for Academic Innovation, and Lynn Harbinson, project manager, for their invaluable contributions to setting up and coordinating the research. At the University of Maryland, Baltimore County, we would like to express our appreciation to William LaCourse, Dean of the College of Natural and Mathematical Sciences (CNMS), Kathy Lee Sutphin, Assistant Dean for Academic Affairs, CNMS, and Raji Baradwaj and Sam Riley, instructors in the mathematics department. From Towson University, we wish to express our deep appreciation to Felice Shore, Associate Professor and Assistant Chair in the Department of Mathematics and the lead coordinator for this study, as well as Raouf Boules, former Chair of the math department and Sheila Orshan, an instructor in the math department. At Bowie State University, we are thankful to Gayle Fink, Assistant Vice-President for Institutional Effectiveness and Vicky Mosely, former program coordinator. At the University of Baltimore we wish to thank Marguerite Weber, former Director of Student Academic Affairs and Academic Initiatives. At Coppin State University, we are thankful to Habtu Braha, Provost and Vice President for Academic Affairs, and Jean Ragin, Assistant Professor in the mathematics department.

This research would not have been possible without the support and contributions of the staff at Pearson. We greatly appreciate their help with implementing the system at our five partner sites and with obtaining and interpreting data.

This report is based on research funded by the Bill & Melinda Gates Foundation. The findings and conclusions contained within are those of the authors and do not necessarily reflect positions or policies of the Bill & Melinda Gates Foundation.

Appendix A: Description of Pilot Studies

University of Baltimore

University of Baltimore offers two summer programs. The first, Summer Bridge, serves students who are admitted “conditionally” to the institution, with grades and test scores slightly lower than the cutoff mark. The program aims to help them fulfill the requirements for enrollment, prepare them for life as a student, and teach them the basic skills they will need to succeed in college. The Summer Bridge program was in its second year and enrolled 80 students during 2013, roughly twice as many as in the first year. The second program, College Readiness Summer Academy, hosts local high school students and aims to get them thinking about applying for and attending college. Since only 13 students enrolled in this program, we decided to exclude those results from our analysis. University of Baltimore’s program covered reading and writing in addition to math.

The six-week Summer Bridge program ran twice, with 47 students in the first session and 33 in the second. Students in both of the six-week instances of the course were divided into control and treatment groups. They were split by gender and then randomly assigned to a group. Students in the control groups had 4.5 hours of face-to-face time and 1.5 hours of online time (using MyMathLab math supplements) each day. Instructors focused their time on “high-touch” activities, engaging with students in small groups or on an individual basis. In the treatment sections, students worked primarily in MyFoundationsLab covering material in math, reading, and writing. They had 2.5 hours of face-to-face time and 3.5 hours of online time per day, allowing for much more independent study time. Online sections were taught in a computer lab, and the students had access to coaches to help them work through the material.
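Splitting students by gender and then randomizing within each group is a form of stratified random assignment. The report does not describe how the assignment was carried out, so the following is only a sketch of one way such a procedure could be implemented; the function name, the roster, and the even split within each stratum are all assumptions made for illustration.

```python
import random
from collections import defaultdict


def stratified_assign(students, stratum_key, seed=0):
    """Randomly split students into treatment and control within each stratum.

    Returns a dict mapping student id to "treatment" or "control". When a
    stratum has an odd number of members, the extra student goes to control.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    strata = defaultdict(list)
    for student in students:
        strata[stratum_key(student)].append(student)

    assignment = {}
    for members in strata.values():
        shuffled = list(members)
        rng.shuffle(shuffled)
        half = len(shuffled) // 2
        for position, student in enumerate(shuffled):
            assignment[student["id"]] = "treatment" if position < half else "control"
    return assignment


# Hypothetical roster: 4 male and 8 female students.
roster = [{"id": i, "gender": "M" if i % 3 == 0 else "F"} for i in range(12)]
groups = stratified_assign(roster, stratum_key=lambda s: s["gender"])
print(groups)
```

Stratifying this way keeps the gender mix similar across the two groups, which simple randomization does not guarantee in small samples.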

For the group as a whole, 94% of the students were black and 68% were female. The specific numbers in each group can be seen in the table below, along with average SAT scores.

Appendix A Table 1: University of Baltimore Summer Bridge Program

| | Overall | Control | Treatment |
|---|---|---|---|
| Number of classes | 4 | 2 | 2 |
| N | 80 | 40 | 40 |
| Female | 68% | 73% | 63% |
| SAT Math | 385 | 391 | 378 |
| SAT Read | 409 | 404 | 416 |

Students in the treatment group entered the summer bridge program with SAT math scores 12 points lower, on average, than control-group students. However, this difference was not statistically significant.

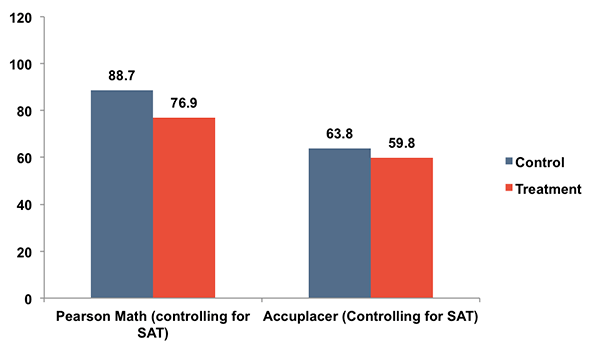

At the end of the program, treatment-group students earned lower scores on both a Pearson Math test[28] and Accuplacer (a widely used math placement test), although only the Pearson Math difference was statistically significant. The difference of 11 points on the Pearson Math test translates into nearly three-quarters of a standard deviation.

Appendix A Figure 1: University of Baltimore Average Post-Test scores

Cost Analysis

The cost data we collected (instructor time sheets and pay rates) suggest that the treatment section cost 31% less per student than the control section for the Summer Bridge Program in 2013. Most of these savings came from the reduced grading time required when using MFL. MFL grades most of the student assignments (including writing assignments), so these instructors spent 67% less time grading than those in the traditional section. There was also a 13% savings per student for instructional costs in the sections using MFL, but this seems to be attributable to the mix of staffing, as the total hours of reported instruction per student are the same.

Data from the 2012 session indicates that the 2013 treatment session cost 40% less than the traditional program in 2012. The program coordinator described a number of ways in which they learned from the first offering of the program to make the second iteration more efficient and effective—including better use of technology.

Coppin State

Coppin State offers a residential summer bridge program targeted to incoming first-year students. Students are assigned to Math 97, Math 98, or College Algebra based on Accuplacer test scores and can retake the placement test at the end of the program. The program is four weeks long, and students attend class for three hours per day, five days per week. The students in the treatment sections using MyFoundationsLab were also asked to work on the platform for two hours per day outside of class time, and usage logs show that about a quarter of assignments were in fact submitted after 5:00 pm.

The summer program was traditionally taught in a lecture format using Pearson’s MyMathLab for supplementary assignments. We wished to test the effects of a hybrid format in which students work independently with the MyFoundationsLab platform with a roaming instructor. Because Pearson software was already in use in the summer program, differences between the cohorts were not as clear cut as we would have liked.

Coppin State’s program consisted of 82 students, divided among seven sections. Five sections were part of the control group, with a total of 55 students. The remaining two were part of the treatment group, with a total of 27 students. The students in each of these groups had similar characteristics. A detailed comparison of the groups is available in the table below. One instructor was assigned to the two treatment sections, and three instructors were assigned to the control sections.

Appendix A Table 2: Coppin State

| | Overall | Control | Treatment |
|---|---|---|---|
| Number of classes | 7 | 5 | 2 |
| N | 82 | 55 | 27 |
| Female | 80% | 85% | 70% |
| Average Age | 17.7 | 17.7 | 17.9 |
| Average Parent Income | $51,119 | $48,920 | $56,212 |
| Married | 0% | 0% | 0% |
| Has a child | 1.2% | 0% | 3.7% |
| Has access to Internet | 93% | 95% | 89% |
| SAT Math | 392 | 385 | 408 |
| SAT Read | 430 | 429 | 432 |
| HS GPA | 2.66 | 2.74 | 2.47 |

Students assigned to the treatment group at Coppin State began the summer program with modestly higher Accuplacer scores than students in the control group. By the end of the 4-week program, the difference in Accuplacer scores had increased to 16 points, a difference that was statistically significant. Controlling for the difference in initial Accuplacer scores, as well as any differences in SAT scores, leaves the difference essentially unchanged—in fact, it increases to about 18 points. A difference of 16-18 points is quite large relative to the standard deviation of Accuplacer scores among Coppin State students, which was 14 points for initial scores and 20 for final scores. Students in the treatment group also scored higher on a departmental math exam administered late in the summer program. The difference of 1.2 points on the test translates into about 45 percent of a standard deviation, and is statistically significant at the 10 percent level.
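To put the Accuplacer difference in standard deviation units, one can divide the score difference by the standard deviation. The sketch below applies that division to the figures quoted above; the function name is ours, and which standard deviation is the more appropriate denominator is a judgment call that the report does not settle.

```python
def standardized_difference(score_difference: float, standard_deviation: float) -> float:
    """Express a score difference as a multiple of the standard deviation."""
    return score_difference / standard_deviation


# Standard deviations of Accuplacer scores among Coppin State students.
sd_by_test = {"initial": 14, "final": 20}

# Unadjusted (16) and adjusted (18) treatment/control differences.
effects = {
    (difference, label): standardized_difference(difference, sd)
    for difference in (16, 18)
    for label, sd in sd_by_test.items()
}

for (difference, label), effect in effects.items():
    print(f"{difference} points relative to {label} SD: {effect:.2f} standard deviations")
```

By either denominator the difference is at least 0.8 standard deviations, compared with about 0.45 for the departmental math exam.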

Although these quantitative results in favor of the hybrid sections were very positive, they must be viewed cautiously given that technology was also used as a supplement in control sections. In addition, control and treatment groups had different instructors, so we cannot rule out the possibility that differences in score gains were due to instructor effectiveness. The key finding in this case is that students in the program made considerable gains in their math scores across both formats, and the hybrid format clearly did not have deleterious effects.

Appendix A Figure 2: Coppin State Average Accuplacer Scores

Cost Analysis

In terms of cost impacts, the use of MFL appears to have eliminated the need for certain tasks, such as preparing for lectures as well as creating and grading assignments.

UMBC Year 1 Pilot

UMBC worked with Ithaka S+R and USM to offer two years of a free, online summer student success program targeting entering first-year undergraduates who had pre-matriculated at UMBC and received scores in a range that would typically result in a math placement into or below college algebra. The goal of the program was to help these students place out of remedial mathematics or college-level algebra as a way to improve their times to degree. This was a very important opportunity for STEM majors who placed into lower-level math courses, as data demonstrate that students in this category have a minimal likelihood of obtaining a degree in a STEM major.

Notices were sent to all eligible students inviting them to participate in the pilot project, which was offered as a four-week summer practicum. Of those who signed up to participate, students were randomly assigned to treatment and control groups. Students in the treatment cohort were provided with access to MyFoundationsLab and received guidance and encouragement via email from instructors during the program. Students in the control group had access to a Blackboard site with static instructional materials and some encouragement via email from instructors during the program.

In the year one pilot, students were invited to attend an on-campus orientation session. The session was poorly attended and was followed up with copies of the planned slide presentation. In year two, the subject matter of the orientation was incorporated into a slide presentation with a video component and sent electronically to all students before the program launched. All students, regardless of their participation levels, were invited to retake the placement exam in August with the assurance that only the higher score would count and that those who placed out of their planned math courses would have the opportunity for assistance in changing their schedules. In the year one pilot, 70 students participated in the program (35 in the MFL group and 35 in the control group).

As an end-of-summer score, we used the students’ most recent placement test score. For students who retook the test, it became their new score. For students who did not retake the test, it was simply their original score. Consequently, our primary outcome variable reflects both decisions about whether to retake the test as well as performance on the retake among those who took advantage of that opportunity.

After retaking the test, treatment group students had statistically indistinguishable scores from the control group—the difference of about 2 points was not significantly different from zero. Treatment-group students were less likely to retake MAA than control-group students, but the difference was not statistically significant. It should be noted that some UMBC students who placed in college-level algebra improved their math placement scores enough to place into Calculus I, allowing those who were STEM majors to meet course pre-requisites and follow recommended course sequences.

Appendix A Figure 3: UMBC Average Placement Test Scores

Cost Analysis

The design of UMBC’s program results in relatively modest costs. The control section required an instructor to post the established list of key algebraic concepts for review on the Blackboard page, along with either review materials (year 1 pilot) or a listing of UMBC’s Learning Resource Center website (field test), and to send periodic email reminders encouraging concept review. The treatment section required instructors to monitor MyFoundationsLab usage, address access issues, be available to answer students’ questions, and send out periodic emails encouraging students to engage with the system. Special three-day access was provided to all students to retake the math placement test. In addition, a system of advising was established with campus advisors to ensure that students with improved placement scores made the best decisions regarding their fall academic schedules. Two math instructors were hired on a part-time basis for the program. Under normal circumstances, the cost of a practicum course and of MFL licenses would also need to be taken into account.

Appendix B: Suggested Messaging to Students

I. Encouragement to join the program

1. Notify students that they have been selected for the treatment group. Introduce the program and highlight the benefits of participating. Institutions included this letter with their IRBs. If not, use the Towson sample on page 4.

2. Remind students 3-4 days before the program begins that they have been selected to receive the MyFoundationsLab software. Include information about the program and the benefits of participating. Pearson handouts can be used for marketing purposes (pages 6-8).

II. Introduction to be sent by monitors on first day [sample text]

Hi _____,

Welcome to the [name] program. I am excited to offer you this opportunity to brush up on your math skills before you enter college in the fall. Please find below some advice for how to get the most from this opportunity.

1. We recommend spending 5-10 hours per week on the platform.

2. Space out your work over multiple days.

3. Work through the materials in the recommended order – we know it’s tempting to jump straight to the quizzes, but you probably won’t get good results that way [or something to that effect]

4. We will monitor your progress online and check in with you twice a week to recommend how to proceed based on your performance.

5. [Login information]

III. Nudges during the program.

Email the students each Monday, Wednesday and Friday. A few different emails should be sent based on the following groups. These should be modified a little each time so they do not get stale or come across as canned.

1. Students with mastery averages below 70% in the past week

Hi ___,

I noticed that you are still working on reaching the mastery level. Here are a couple of tips to improve your progress…

2. Students with mastery average between 70% and 80% in the past week

Hi ___,

It looks like you’ve been working hard on the MFL work. I noticed that you are almost at the mastery level for some of your work. Keep working hard to get to the 80% mark! The more you master in this program, the better positioned you will be to succeed in your fall math courses and move towards your degree more quickly.

3. Students with mastery averages above 80% in the past week

Hi ___,

Congratulations on your achievements with the MFL work! It looks like you are doing well on the exercises and assessments so far, and I want to encourage you to continue working hard on these. If you keep this up, I think you will do well in your math courses this fall and maybe even place out of a math course.

4. Students who have not logged onto the platform since the last email

Hi ____,

I noticed that you have not logged onto MFL in a few days. I want to make sure you don’t fall behind. It’s important to work on these materials often because they can help you progress more quickly through your math courses. Many of your classmates are making a lot of progress and I don’t want you to miss out on this opportunity!

5. Students who have never logged onto the platform [Send weekly]

Hi ______,

I noticed that you have not accessed the MFL math resources [institution] is providing to help you prepare for the fall. Although you may be busy this summer, I want to make sure you know how working on this program, even for a short amount of time, could help you. [Mention some of the benefits.] I’ve been checking in with a lot of your classmates, and see that many of them are making a lot of progress. I don’t want you to miss out!
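The five nudge groups above amount to a simple decision rule, sketched below in Python. The record type, the field names, the order in which the checks are applied, and the handling of a mastery average of exactly 80% are all assumptions made for illustration. The source lists the groups but does not specify how overlapping or boundary cases should be resolved.

```python
from dataclasses import dataclass


@dataclass
class WeeklyActivity:
    """Hypothetical per-student record a monitor might assemble before emailing."""
    ever_logged_in: bool
    logged_in_since_last_email: bool
    mastery_average: float = 0.0  # percent over the past week; 0.0 if no work (assumed)


def nudge_group(activity: WeeklyActivity) -> int:
    """Return the number (1-5) of the nudge email template to send."""
    # Assumption: login status is checked before mastery, so inactive
    # students receive a login reminder rather than a mastery message.
    if not activity.ever_logged_in:
        return 5  # never logged onto the platform (sent weekly)
    if not activity.logged_in_since_last_email:
        return 4  # no login since the last email
    if activity.mastery_average < 70:
        return 1  # mastery average below 70%
    if activity.mastery_average <= 80:
        return 2  # between 70% and 80% (exactly 80% placed here by assumption)
    return 3      # above 80%


# Example: a student who logged in this week with a 75% mastery average.
example_group = nudge_group(WeeklyActivity(True, True, 75.0))
```

Checking login status first keeps the mastery-based messages for students who have recent work to comment on. A program could reasonably order the checks differently.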

IV. Encouragement to Retake the Placement Exam (Email followed by phone call from either monitor or program coordinator)

For those who used MFL actively [define cutoff]:

Congratulations on completing the MFL program! Based on your hard work on these materials, I think you have improved several of your math skills and will be better prepared to start college this fall. We are offering you an opportunity to retake the placement exam to try to place into a higher math course, although completing this program does not guarantee a higher placement. In addition, by retaking this test, you will be able to help us measure the value of this program. If you place into a higher math course, we will work with you to switch into this new course for the fall.

For those who used MFL but not actively [set cutoff]:

I hope you enjoyed working with MyFoundationsLab this summer! Regardless of how much time you spent working on the program, there is still a good chance that you can place into a higher level math course if you retake the placement test. We are offering you an opportunity to retake the placement exam, and only your highest score will count. In addition, by retaking this test, you will be able to help us measure the value of this program. If you place into a higher math course, we will work with you to switch into this new course for the fall.

For those who never used MFL:

I hope you had a good summer! We would like to offer you the opportunity to retake your math placement test. Although you may not have done anything over the summer to improve your math skills, I still think it would be valuable for you to retake this exam. Many students do better the second time when re-testing, and if your test score improves significantly, you may be able to take a higher level math course and move more quickly towards your degree. Advisors will work with you to make sure you get into the right class.

Appendix C: Sample Invitation Letter

Dear Student,

A strong foundation in algebra can make a significant difference in an individual’s college experience. [Institution] is making every effort to explore a variety of innovations that can help students hit the ground running in their required mathematics courses.

You were selected to participate in the Summer Refresher Opportunity (SRO) study as a result of your placement test score. If you agree to participate, we will help you enroll in a five-week online program, free of charge to you. SRO will make use of a self-guided online mathematics program called MyFoundationsLab (MFL). The great thing about MFL is that it is tailored to your specific needs based on an initial diagnostic you’ll take when you first enroll. The program will give you a “path” through the algebra skills you need to learn, with opportunities to watch videos, see how different problems are solved, do practice problems on your own, and get immediate feedback.

Here are the details:

During the week of June 16th, a “coach” will e-mail you directions for getting enrolled and will answer questions, should you have trouble using the online program at any time.

You will enroll no later than June 23rd. You will have five weeks to work on the material, with SRO ending on July 25th.

You may work with the program to the extent you want. There is no limit to how much time you might spend on it; if you choose to spend less time, there is no penalty.

Throughout the five weeks, you may hear from the coach intermittently, noting your progress and encouraging you to keep on!

You will be asked to complete a brief survey.

With the usual placement testing policy waived for this study, you will have the opportunity to retake the online mathematics placement test during a 5-day window after the project ends on July 25 at no cost to you. If your new score places you into a higher course than originally planned, you will be advised and assisted to register for a more appropriate course.

Your participation in this project offers a unique and beneficial opportunity both for you and also for [Institution], as it will help us determine how best to serve college students at [Institution] and elsewhere.

If you would like to participate, please e-mail or call us right away! Call [tel number] and let them know you’re calling about the “SRO project” or e-mail me directly at [contact email].

We will be confidentially collecting data about your usage of the program, answers to surveys, and progress in mathematics at [Institution], but only anonymous data will be used in data analysis to determine the impact of the SRO on everyone. At no time will your name be used. Neither your participation level, nor your performance in this program can have any impact (positive or negative) on your college record, other than potentially improving your placement results. Furthermore, your participation in any of the activities mentioned would be completely voluntary and you can quit at any time.

As soon as we hear from you, we will begin the enrollment process, including getting you up and running with the online software program. We will need to hear from you no later than Thursday, June 19, in order to ensure that you are ready to begin the program by June 23rd. If you have any questions about the SRO, you may contact me by telephone [number] or e-mail at [email]. You may also contact [name], chairperson of [Institution’s] Institutional Review Board at [phone number].

Thank you for considering participation in the Summer Refresher Opportunity. I believe it offers a great way for you to start your college career at [Institution]!

Sincerely,

[Program administrator / math faculty member]

Appendix D: More Detailed Data for Field Tests

Appendix D Table 1: Student Characteristics by Institution and Treatment Group

| | Bowie State Control | Bowie State Treatment | Towson Control | Towson Treatment | UMBC Control | UMBC Treatment |
|---|---|---|---|---|---|---|
| SAT Math | 419.661 | 384.151 | 512.281 | 506.100 | 563.404 | 542.449 |
| SAT Verbal | 434.915 | 385.151 | 531.614 | 531.467 | 588.936 | 578.163 |
| SAT V Missing | 9% | 41% | 17% | 15% | 19% | 16% |
| SAT M Missing | 9% | 41% | 17% | 15% | 19% | 16% |
| HS GPA | 3.478 | 3.446 | 3.620 | 3.670 | | |
| HS GPA Missing | 3% | 2% | 12% | 14% | | |
| White | 0% | 0% | 68% | 61% | 34% | 43% |
| Black | 88% | 89% | 16% | 20% | 25% | 38% |
| Hispanic | 3% | 2% | 7% | 6% | 14% | 5% |
| Asian | 0% | 4% | 2% | 4% | 9% | 12% |
| Other Race | 8% | 6% | 7% | 8% | 18% | 2% |
| Race Missing* | 9% | 41% | 3% | 2% | 3% | 0% |
| Female | 53% | 68% | 67% | 68% | 59% | 57% |
| Age | 17.831 | 18.019 | 18.170 | 18.194 | 18.707 | 18.103 |
| Age Missing | 9% | 41% | 3% | 2% | 0% | 0% |
| Inc <$50,000 | 45% | 65% | 24% | 25% | | |
| Inc $50,000 to $100,000 | 38% | 22% | 26% | 25% | | |
| Inc > $100,000 | 18% | 14% | 50% | 49% | | |
| Income Missing | 14% | 43% | 17% | 14% | | |
| Pell Eligible | 34% | 36% | | | | |
| Pell Status Unknown | 14% | 19% | | | | |
| Initial Placement Score | 61.262 | 64.611 | 12.507 | 12.472 | 18.690 | 19.052 |
| Observations | 65 | 90 | 345 | 352 | 58 | 58 |

*Percentages of students by race are for those for whom data was available.

Appendix D Table 2: Breakdown of Towson Participation by Placement Test Score

| Placement Score* | % yes | % logged on | N |
|---|---|---|---|
| 0-5 | 77% | 69% | 13 |
| 6-9 | 67% | 60% | 52 |
| 10-11 | 53% | 47% | 43 |
| 12-16 | 53% | 43% | 244 |
| Total | 56% | 47% | 352 |

*Maximum possible score is 25
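As a consistency check on the table above, the Total row can be compared with the N-weighted average of the four score bands. The sketch below assumes that is how the totals were derived, which the source does not state. Because the band percentages are themselves rounded, the result is only approximate.

```python
# (score band, share saying yes, share who logged on, N), copied from the table above
bands = [
    ("0-5", 0.77, 0.69, 13),
    ("6-9", 0.67, 0.60, 52),
    ("10-11", 0.53, 0.47, 43),
    ("12-16", 0.53, 0.43, 244),
]

# Total number of students across all bands
total_n = sum(n for _, _, _, n in bands)

# N-weighted averages of the two participation measures
pct_yes = sum(yes * n for _, yes, _, n in bands) / total_n
pct_logged_on = sum(logged * n for _, _, logged, n in bands) / total_n

print(f"N = {total_n}, yes = {pct_yes:.0%}, logged on = {pct_logged_on:.0%}")
```

With these inputs the weighted averages round to the 56% and 47% shown in the Total row, and the band counts sum to the 352 students in the Towson treatment group.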

Appendix D Table 3: Towson Outcomes Detail

| | Control | Treatment | Difference |
|---|---|---|---|
| Retook placement test | 10% | 23% | 13% |
| Pretest score | 12.5 | 12.5 | 0.0 |
| Max(pretest, post-test) | 13.1 | 13.7 | 0.7 |
| GPA, all graded courses | 2.8 | 2.8 | 0.0 |
| GPA, math courses | 2.4 | 2.4 | 0.0 |
| Took any math course | 84% | 85% | 1% |
| Passed any math course | 75% | 74% | 0% |
| Number of students | 345 | 352 | |

*Differences that are statistically significant at p<0.10 are bolded.

Appendix D Table 4: Towson Treatment-Control Differences

| | All Students | Placement Score (Pre-Test) 0-9 | Placement Score (Pre-Test) 10-11 | Placement Score (Pre-Test) 12-16 |
|---|---|---|---|---|
| Retook placement test | 0.13 | 0.10 | 0.14 | 0.13 |
| GPA, all graded courses | 0.03 | 0.19 | 0.07 | -0.02 |
| GPA, math courses | -0.02 | 0.18 | -0.22 | -0.03 |
| Passed any math course | 0.00 | 0.11 | -0.12 | -0.01 |
| Started above algebra | 0.02 | 0.13 | -0.05 | 0.01 |
| Passed above algebra | 0.00 | 0.02 | 0.02 | -0.01 |
| Started 200-level | -0.02 | 0.01 | 0.05 | -0.04 |
| Passed 200-level | -0.03 | 0.01 | 0.05 | -0.05 |
| Number | 697 | 128 | 86 | 483 |

*Differences that are statistically significant at p<0.10 are bolded.

Appendix D Table 5: Bowie State University Outcomes Detail

| | Control | Treatment | Difference |
|---|---|---|---|
| Retook placement test | 6% | 30% | 24% |
| Pre-test score | 61.3 | 64.6 | 3.3 |
| Max(pre-test, post-test) | 61.7 | 68.1 | 6.4 |
| GPA, all graded courses | 2.2 | 2.3 | 0.1 |
| GPA, math courses | 2.0 | 2.1 | 0.1 |
| Took any math course | 95% | 96% | 0% |
| Passed any math course | 78% | 81% | 3% |
| Number of students | 65 | 90 | |

*Differences that are statistically significant at p<0.10 are bolded.

Appendix D Table 6: UMBC Outcomes Detail (year 2 field test only)

| | Control | Treatment | Difference |
|---|---|---|---|
| Retook placement test | 62% | 36% | -26% |
| Pre-test score | 18.7 | 19.1 | 0.4 |
| Max(pre-test, post-test) | 22.0 | 20.9 | -1.1 |
| GPA, all graded courses | 2.9 | 2.8 | 0.0 |
| GPA, math courses | 2.3 | 2.2 | -0.1 |
| Took any math course | 83% | 90% | 7% |
| Passed any math course | 72% | 79% | 7% |
| Number of students | 58 | 58 | |

*Differences that are statistically significant at p<0.10 are bolded.

Appendix D Table 7: Detail on Students who Retook the Placement Test Across Sites